“The Secret Service should start recruiting through Mumsnet to attract more women to senior posts, MPs have said.”

[Sky News, March 5, 2015]

Whilst some of us may always have dreamed of being ‘M’, perhaps we could start by helping all Mums understand how surveillance works in real life today: in our homes, our schools and all our lives.

In the words of Franklin D. Roosevelt in his 1933 inaugural address:

“This is preeminently the time to speak the truth, the whole truth, frankly and boldly…

“Let me assert my firm belief that the only thing we have to fear is fear itself—nameless, unreasoning, unjustified terror which paralyzes needed efforts to convert retreat into advance.”

In the debate on the ‘war on terror’, it is hard to know what is truthful, and which fears are ‘justified’ rather than ‘nameless and unreasoning.’

To reason well, we need information about what is going on, and that picture can feel complex and unclear.

What concrete facts do you and I have about terrorism today, and the wider effects it has on our home life?

Whether you have children in school, are a journalist, a whistleblower or a lawyer, or have simply thought about the effects of recent news, these issues may affect our children, or any of us, in ways we do not expect.

It might surprise you that surveillance law was used to track the movements of a mother and her children [1] when a council wasn’t sure whether her school application was for the correct catchment area. The council used powers under the Regulation of Investigatory Powers Act 2000 (RIPA). [2]

Recent headlines are filled with the story of three more girls who are reported to have travelled to Syria.

As a Mum I’d be desperate if they were my teens, and I cannot imagine what their families must feel. There are conflicting opinions, and politics, but let’s leave that aside. These girls are each somebody’s daughter, and at risk.

As a result, MPs are talking about what should be taught in schools. Do parents and citizens agree, and do we even know what that is?

Shadow Business Secretary Chuka Umunna, Labour MP, told Pienaar’s Politics on BBC Radio 5 Live: “I really do think this is not just an issue for the intelligence services, it’s for all of us in our schools, in our communities, in our families to tackle this.”

Justice Minister Simon Hughes told Murnaghan on Sky News it was important to ensure a counter-argument against extremism was being made in schools and also to show pupils “that’s not where excitement and success should lie”. [BBC 22 February 2015]

There are already policies in schools that touch all our children and laws which reach into our family lives that we may know little about.

I have lots of questions about what, and how, we are teaching our children about ‘extremism’ in schools, and about how the state uses surveillance to monitor our children’s lives and our own.

This may affect all schools and places of education, not only those we hear about in the news, so it includes yours.

We all want the best for our young people and security in society, but are we protecting and promoting the right things?

In practice, are today’s policies helping or hardening our children’s thinking?

Of course I want to see that all our kids are brought up safe. I also want to bring them up free from prejudice, and to see that they get equal treatment and an equal start in life in a fair and friendly society.

I think we should understand the big picture better.

1. Do you feel confident that you know what is being taught in schools under the Prevent programme, or what is done with the information schools record or share, including under its proposed expansion to pre-schools and toddlers?

2. Do government communications surveillance programmes match up in reality with real-world evidence of need, and how is their effectiveness measured?

3. Do these programmes create more problems as side-effects we don’t see or don’t measure?

4. If any of our children have information recorded about them in these programmes, how is it used, who sees it and for what purposes?

5. How much do we know about the laws brought in under the banner of ‘counter-terror’ measures, and how they are used on ordinary citizens in everyday life?

We always think the unexpected will happen to someone else, and that all surveillance is rightly justified, until it isn’t.

Labels can be misleading.

One man’s terrorist may be another’s freedom fighter.

One man’s investigative journalist is another’s ‘domestic extremist.’

Who decides who is who?

Has anyone asked in Parliament why religious hate crime rose by 45% in 2013/14, and what we are doing about it? (Up 700 to 2,273 offences; crime figures [19].)

These aren’t easy questions, but we shouldn’t avoid asking them because it’s difficult.

I think we should ask: do we have laws which discriminate by religion, censor our young people’s education, or store information about us which is used in ways we don’t expect or know about?

Our MPs are, after all, only people like us, who represent us and make decisions that affect us. And on 7th May, they may be about to change.

As a mother, I care who wins the next General Election, because it will shape the policies made over the next five years or more: policies that will affect our children, and all of us as citizens.

It should be clear what these programmes are, and there is no reason why they should not be transparent.

“To counter terrorism, society needs more than labels and laws. We need trust in authority and in each other.”

We need trust in authority and in each other in our society, built on a strong and simple legal framework and founded on facts, not fears.

So I think this should be an election issue. What does each party plan to do on surveillance to resolve the issues raised by journalists, lawyers and civil society? What practical programmes does each party propose that will be, in Chuka Umunna’s words: “for all of us in our schools, in our communities, in our families to tackle this.”

If you agree, you could ask your MP and your prospective parliamentary candidates: what is already done in real life, and what are their future policies?

Let’s understand ‘the war on terror’ at home better, and its real impacts. These laws and programmes should be transparent, easy to understand, and not only legal, but clearly just, and proportionate.

Let’s get back to some of the basics, and respect the rights of our children.

Let’s start to untangle this spaghetti of laws and the programmes that affect us in practice, and understand how their success is measured.

Solutions for protecting our children are neither simple nor short term. But they may not always mean more surveillance.

Whether the Secret Service will start recruiting through Mumsnet or not, we could start with better education of us all.

At the very least, we should understand what ‘surveillance’ means.

****

If you want more detail, I look at it below.

The laws applied in Real Life

Have you ever looked at case studies of how surveillance law is used?

In one case, the movements of a mother and her children [1] were watched and tracked when a council wasn’t sure whether her school application was for the correct catchment area. The council used powers under the Regulation of Investigatory Powers Act 2000 (RIPA). [2]

Do you think it is just or fair that a lawyer’s conversations with his client [3] were recorded and may have been used in preparing the trial, when our justice system rests on the principle of innocent until proven guilty?

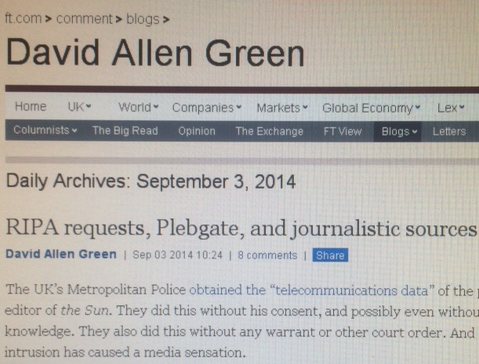

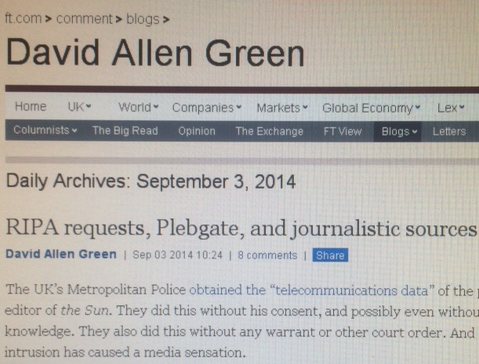

Or is it right that the police could use journalists’ phone records to identify their sources, without telling the journalists or getting independent approval, from a judge for example?

These aren’t theoretical questions; they stem from the real-life use, in practice, of laws made in the ‘counter-terrorism’ political arena.

Other programmes store information about everyday people, in ways we may find surprising.

In November 2014 it was reported that six British journalists [4] had found that personal and professional information about them had been collected and stored on the ‘domestic extremist’ database by the Metropolitan Police in London.

They were neither criminals nor under surveillance for any wrongdoing.

One of the journalists responded in a blog post on the NUJ website [5]:

“…the police have monitored public interest investigations in my case since 1999. More importantly if the police are keeping tabs on a lightweight like myself then they are doing the same and more to others?”

Have you ever taken part in a protest, or reported on one?

‘Others’ in that ‘domestic extremist list’ might include you, or me.

Current laws may be about to change (again) [6], perhaps for the better, but will yet more rushed legislation in this area be done right?

There are questions over the detail and what will actually change. There are multiple bills affecting security, counter-terrorism and data access before Parliament, with panels and reviews going on in parallel.

This is the culmination of concern and pressure over a long period of time, focused on one set of legal rules: the Regulation of Investigatory Powers Act (RIPA).

The latest draft code of practice [7] for the Regulation of Investigatory Powers Act (RIPA) [8] allows the police and other authorities to continue to access journalists’ and other professionals’ communications without any independent process or oversight.

‘Nothing to hide, nothing to fear’ is a phrase we often hear about surveillance, but as these examples show, its use is widespread and often unexpected, not confined to the extreme cases we are often told about.

Most recently, David Cameron again called for ever wider surveillance legislation, as reported in The Telegraph on 12 January 2015, saying: [9]

“That is why in extremis it has been possible to read someone’s letter, to listen to someone’s telephone, to mobile communications.”

Laws and programmes permit these kinds of activity without being transparent to the wider public. Is that right?

The Deregulation Bill’s changes appear now to have been amended so that the provisions affecting journalists under the Police and Criminal Evidence Act (PACE) [10] are kept after all. But what are the effects for other professions, and how exactly will this change interact with further new laws such as the Counter Terrorism and Security Act [p20]? [11]

It’s understandable that politicians are afraid of doing nothing: if a terrorist attack takes place, they risk looking as though they failed.

But politicians appear to have become so keen to be seen doing ‘something’ in the face of terror attacks that they are doing too much, in the wrong places, and we have ended up with a legislative spaghetti of simultaneous changes, with no end in sight.

It’s certainly no way to make legal changes understandable to the British public.

Political change may come as a result of the General Election. What will it mean for the ‘war on terror’ as applied at home, and for the average citizen’s experience of surveillance programmes in real life?

What do we know about how we are affected? The harm to some in society is real, and is clearly felt in some communities, if not all. [12]

Where is the evidence, for this debate, of how these laws affect us in real life, and what difference they make compared with their intentions?

Anti-terror programmes in practice: in schools and surgeries

In addition to these changes in law, there are a number of programmes in place at the moment.

I have already mentioned the Prevent programme [16] above.

Its expansion to wider settings would bring in our children from the age of two upwards, placing them under an additional level of scrutiny and surveillance. [Criticism of the proposal has come from across the UK [13] [14].]

How might what a three-year-old says or draws be interpreted, or recorded about them or their family? Who accesses that data?

What film material is being produced that is “distributed directly by these organisations, with only a small portion directly badged with government involvement”, and who is shown it, and why? [Review of Australia’s Counter Terror Machinery, February 2015] [17]

What if it’s my child who has something recorded about them under ‘Prevent’? Will I be told? Who will see that information? What do I do if I disagree with something said or stored about them?

Does surveillance benefit society, or make parts of it feel alienated? And how are both its intangible costs and its benefits measured?

When opaque programmes like these, embedded in education or social care, are combined with political thinking that can appear to be based on prejudice rather than fact [18], the outcomes could be unexpected and reminiscent of 1930s anti-religious laws.

Baroness Hamwee raised this concern about the Prevent programme in the Lords on 28 January 2015:

“I am told that freedom of information requests for basic statistics about Prevent are routinely denied on the basis of national security. It seems to me that we should be looking for ways of providing information that do not endanger security.

“For instance, I wondered how many individuals are in a programme because of anti-Semitic violence. Over the last day or two, I have been pondering what it would look like if one substituted “Jewish” for “Muslim” in the briefings and descriptions we have had.” Baroness Hamwee: [28 Jan 2015 : Column 267 -11]

“It has been put to me that Prevent is regarded as a security prism through which all Muslims are seen and that Muslims are suspect until proved otherwise. The term “siege mentality” has also been used.

“We have discussed the dangers of alienation arising from the very activities that should be part of the solution, not part of the problem, and of alienation feeding violence. […]

“Transparency is a very important tool … to counter those concerns.”

Throughout history, good and bad have depended on your point of view. In 1970s London, had today’s technology existed, would all Catholics have been swept up in this kind of extra scrutiny?

“Early education funding regulations have been amended to ensure that providers who fail to promote the fundamental British values of democracy, the rule of law, individual liberty and mutual respect and tolerance for those with different faiths and beliefs do not receive funding.” [Consultation guidance, Dec 2014]

The programme’s own values seem undermined by its attitudes to religion and individual liberty. On universities, the same guidance, in its paragraph on ‘freedom of speech’, suggests restrictive planning measures for protest meetings and IT surveillance of material accessed for ‘non-research purposes’.

School and university are a time when our young people explore all sorts of ideas, including in order to understand and criticise them. Just looking at material online should not, in itself, have consequences. Do we really want to censor what our young people may and may not think about, and who is deciding the criteria?

For families affected by violence, nothing can justify their loss, and we may feel that anything is justified to prevent it.

But are we seeing widespread harm in society as side effects of surveillance programmes?

We may think we live in a free and modern society. History tells us how easily governments can slide in previously unthinkable directions. It would be complacent to think, ‘it couldn’t happen here.’

Don’t forget: religious hate crime rose by 45% in 2013/14 (crime figures [19]).

Writers self-censor their work. Whistleblowers may not come forward to speak to journalists if they feel actively watched.

Terrorism is not new.

Young people with fervour to do something for a cause and going off ‘to the fight’ in a foreign country is not new.

In the 1930s the UK Government made it illegal to volunteer to fight in the Spanish Civil War, but over 2,000 went anyway.

New laws are not always solutions, especially when ever stricter surveillance laws may still not make terror prevention on the ground any more accurate. [As Charlie Hebdo and Copenhagen showed, the people involved in these cases were already known to police. In the case of Lee Rigby it was even more complex.]

How about improving our citizens’ education, and transparency about what’s going on and why, based on fact and not fear?

If the state shouldn’t nanny us, then it must give citizens and parents enough transparency about the current reality to inform ourselves and our children in practical ways, and to know whether we are being snooped on or recorded under surveillance.

There is an important role for cyber experts and civil society in educating and challenging MPs on policy. There is also a very big gap in practical knowledge for the public, which should be addressed.

Can I trust that what I discuss with my doctor or lawyer, or share if I come forward as a whistleblower, will be kept confidential?

Do I know whether my email and telephone conversations, or social media interactions are being watched, actively or by algorithms?

Can we trust that we are treating all our young people equally and without prejudice? And how are we measuring the impact of the programmes we impose on them?

To counter terrorism, society needs more than labels and laws

We need trust in authority and in each other in our society, built on a strong and simple legal framework and founded on facts, not fears.

If the Prevent programme is truly needed at this scale, tell us why and tell us all what our children are being told in these programmes.

Even though the consultation has closed, we should ask our MPs: what is the evidence behind the thinking on extending Prevent into toddler settings and far beyond? What risks and benefits have been assessed for any of our children and families who might be affected?

Do these efforts need to be expanded to include two-year-olds?

Are all efforts to keep our kids and society safe equally effective and proportionate to potential and actual harm caused?

Alistair MacDonald QC, chairman of the Bar Council, said:

“As a caring society, we cannot simply leave surveillance issues to senior officers of the police and the security services acting purportedly under mere codes of practice.

What is surely needed more than ever before is a rigorous statutory framework under which surveillance is authorised and conducted.”

Whether we are disabled PIP protesters outside Parliament or mothers on the school run, journalists or lawyers, doctors or teachers, or anyone else, these changes in the law, or the lack of them, may affect us. Baroness Hamwee clearly sees harm caused in the community.

Development of a future legislative framework should reflect public consensus, as well as the expert views of technologists, jurists, academics and civil liberty groups.

What don’t we know? And what can we do?

According to an Ipsos MORI poll for the Evening Standard in October 2014 [20], only one in five people think the police should be free to trawl through the phone records of journalists to identify their sources.

Sixty-seven per cent said the approval of a judge should be obtained before such powers are used.

As far as I recall, no one has asked the public whether we think the Prevent programme is appropriate or proportionate.

Who checks that actions taken under it are reasonable and not reactionary?

We really should be asking: what are our kids being shown, taught and told, and how might they be informed upon?

I’d like all of that in the public domain, for all parents and guardians. The curriculum, who is teaching and what materials are used.

It’s common sense to see that young people who feel isolated or defensive are less likely to talk to parents about their concerns.

“Nothing to hide, nothing to fear” is a well-known line in the surveillance debate. But the argument is flawed, because information can be wrong.

If the internet and all our communications are routinely tracked without oversight, ‘Nothing to fear, nowhere to hide’ may become the alternative meme we hear debated soon.

Ensuring proper judicial oversight in all these laws and processes, with an independent judge giving an extra layer of approval, would restore public trust in this system and in the authority on which it depends.

It could pave the way for a new hope of restoring the checks and balances in many governance procedures, which a just and democratic society deserves.

As Roosevelt said: “let me assert my firm belief that the only thing we have to fear is fear itself—nameless, unreasoning, unjustified terror.”

******

[On Channel 4’s 4oD: the Oscar-winning Snowden documentary ‘Citizenfour’]

References:

[1] The Guardian, 2008, council spies on school applicants

[2] Wikipedia RIPA legislation

[3] UK admits unlawfully monitoring communications

[4] http://www.theguardian.com/uk-news/2014/nov/20/police-legal-action-snooping-journalists

[5] Journalist’s response

[6] SOS Campaign

[7] RIPA Consultation

[8] The RIPA documents are directly accessible here

[9] The Telegraph

[10] Deregulation Bill

[11] Counter Terrorism and Security Act 2015

[12] Baroness Hamwee comments in the House of Lords [Hansard]

[13] Consultation response by charity Children in Scotland

[14] The Telegraph, Anti-terror plan to spy on toddlers ‘is heavy-handed’

[15] GPs told to specify counter terrorism leads [Prevent]

[16] The Prevent programme, BBC / 2009 Prevent programme for schools

[17] Review of Australia’s CT Machinery

[18] Boris Johnson, March 2014

[19] Hate crime figures 2013-14

[20] Ipsos MORI poll, October 2014

******

image credit: ancient history


Continue reading Nothing to fear, nowhere to hide – a mother’s attempt to untangle UK surveillance law and programmes →