This follows on from: 1. Digital revolution by design: building for change and people.

***

Talking about the future of digital health in the NHS, Andy Williams went on to ask, what makes the Internet work?

In my head I answered him, freedom.

Freedom from geographical boundaries. Freedom of speech to share ideas and knowledge in real time with people around the world. The freedom to fair and equal use. Cooperation, creativity, generosity…

Where these freedoms do not exist or are regulated, the Internet may not work well for its citizens, and its potential is restricted along with its risks.

But the answer he gave was standards.

And of course he was right. Agreed standards are needed when sharing a global system so that users, their content and how it works behind the screen cooperate and function as intended.

I came away wondering what the digital future embodied in the NHS NIB plans will look like, who has their say in its content and design and who will control it?

What freedoms and what standards will be agreed upon for the NHS ‘digital future’ to function and to what purpose?

Citizens help shape the digital future as we help define the framework of how our data are to be collected and used, through what public feeling suggests is acceptable and what people actually use.

What are some of the expectations the public have and what potential barriers exist to block achieving its benefits?

It’s all too easy when discussing the digital future of the NHS to see it as a destination. Perhaps we could shift the conversation focus to people, and consider what tools digital will offer the public on their life journey, and how those tools will be driven and guided.

Expectations

One key public expectation will be of trust: if something digital is offered under the NHS brand, it must be of the rigorous standard we expect.

Is every app a safe, useful tool or fun experiment and how will users [especially for mental health apps where the outcomes may be less tangibly measured than say, blood glucose] know the difference?

A second expectation must be around universal equality of access.

A third expectation must be that people know, once the app is downloaded or enrolment done, what they have signed up to.

Will the NHS England / NIB digital plans underway create or enable these barriers and expectations?

What barriers exist to the NHS digital vision and why?

Is safety regulation a barrier to innovation?

The ability to broadly share innovation at speed is one of the greatest strengths of digital development, but can also risk spreading harm quickly. Risk management needs to be upfront.

We assume that digital designs will put at their heart the core principles in the spirit of the NHS. But if apps are not available on prescription and are essentially a commercial product with no proven benefit, does that exploit the NHS brand trust?

Regulation of quality and safety must be paramount, or apps risk doing harm to the person as any other treatment could. Regulation must further consider reputational risk to the NHS and the app providers.

Regulation shouldn’t be seen as a barrier, but as an enabler to protect and benefit both user and producer, and indirectly the NHS and state.

Once safety regulation is achieved, I hope that spreading benefits will not be undermined by creating artificial boundaries that restrict access to the tools by affordability, in a postcode lottery, or in language.

But are barriers being built by design in the NHS digital future?

Cost: commercial digital exploitation or digital exclusion?

There appear to be barriers being built by design into the current NHS apps digital framework. The first being cost.

Even in the UK today, exclusion is already measurable among the poorest in maternity care, between those who can and those who cannot afford the smartphone data allowance needed for e-red book access, attendees were told by its founder at #kfdigital15.

Is digital participation and its resultant knowledge or benefit to become a privilege reserved for those who can afford it? No longer free at the point of service?

I find it disappointing that for all the talk of digital equality, apps are for sale on the NHS England website and many state they may not be available in your area – a two-tier NHS by design. If it’s an NHS app, surely it should be available on prescription and/or be free at the point of use and for all, like any other treatment? Or is it yet another example of NHS postcode lottery care?

There are tonnes of health apps on the market which may not have much proven health benefit, but they may sell well anyway.

I hope that decision makers shaping these frameworks and social contracts in health today are also looking beyond the worried well, who may be the wealthiest and can afford apps, so that the needs of those who can’t afford to pay for them are not left behind.

At home, it is some of the least wealthy who need the most intervention and from whom there may be little profit to be made. There is little in 2020 plans I can see that focuses on the most vulnerable, those in prison and IRCs, and those with disabilities.

Regulation, in addition to striving for quality and safety by design, can ensure there is no commercial exploitation of purchasers. However, it is a question of principle that will decide for or against exclusion for users based on affordability.

Geography: crossing language, culture and country barriers

And what about our place in the wider community, the world wide web, as Andy Williams talked about: what makes the Internet work?

I’d like to think that governance and any “kite marking” of digital tools such as apps, will consider this and look beyond our bubble.

What we create and post online will be on the world wide web. That has great potential benefits and has risks.

I feel that in the navel gazing focus on our Treasury deficit, the ‘European question’ and refusing refugees, the UK government’s own insularity is a barrier to our wider economic and social growth.

At the King’s Fund event and at the NIB meeting the UK NHS leadership did not discuss one of the greatest strengths of online.

Online can cross geographical boundaries.

How are NHS England approved apps going to account for geography and language and cross country regulation?

What geographical and cultural barriers to access are being built by design just through lack of thought into the new digital framework?

Barriers that will restrict access and benefits both in certain communities within the UK, and to the UK.

One of the three questions asked at the end of the NIB session was how the UK Sikh community can be better digitally catered for.

In other parts of the world both traditional and digital access to knowledge are denied to those who cannot afford it.

This photo, reportedly from Indonesia, is great [via Banksy on Twitter, and apologies I cannot credit the photographer]: two boys on the way to school pass their peers on their way to work.

I wonder if one of these boys has the capability to find the cure for cancer?

What if he is one of the five, not one of the two?

Will we enable the digital infrastructure we build today to help global citizens access knowledge and benefits, or restrict access?

Will we enable broad digital inclusion by design?

And what of data sharing restrictions: Barrier or Enabler?

Organisations that talk only of legal, ethical or consent ‘barriers’ to datasharing don’t understand human behaviour well enough.

One of the greatest risks to achieving the potential benefits from data is the damage done to it by organisations that are paternalistic and controlling. They exploit a relationship rather than nurturing it.

The data trust deficit from the Royal Statistical Society has lessons for policymakers, including the finding that: “Health records being sold to private healthcare companies to make money for government prompted the greatest opposition (84%).”

Data are not an abstract to be exploited, but personal information. Unless otherwise informed, people expect that information offered for one purpose, will not be used for another. Commercial misuse is the greatest threat to public trust.

Organisations that believe behavioural barriers to data sharing are an obstacle have forgotten that trust is not something to be overcome, but to be won and continuously reviewed and protected.

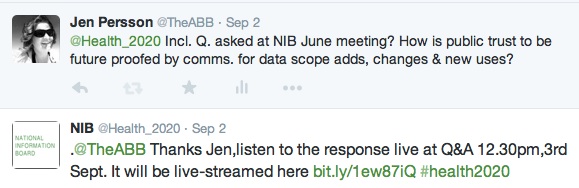

The known barrier without a solution is the lack of engagement that is fostered where there is a lack of respect for the citizen behind the data. A consensual data charter could help to enable a way forward.

Where is the wisdom we have lost in knowledge?

Once an app is [prescribed], used, data exchanged with the NHS health provider and/or app designer, how will users know that what they agreed to in an in-store app, does not change over time?

How will ethical guidance be built into the purposes of any digital offerings we see approved and promoted in the NHS digital future?

When the recent social media experiment by Facebook only mentioned the use of data for research after the experiment, it caused outcry.

It crossed the line between what people felt acceptable and intrusive, analysing the change in behaviour that Facebook’s intervention caused.

That this manipulation is not only possible but could go unseen is both a risk and cause for concern in a digital world.

Large digital platforms, and even small apps, have the power to drive not only consumer, but potentially social and political decision making.

“Where is the knowledge we have lost in information?” ask the words of T. S. Eliot in Choruses from ‘The Rock’. “However you disguise it, this thing does not change: The perpetual struggle of Good and Evil.”

Knowledge can be applied to make a change to current behaviour, and offer or restrict choices through algorithmic selection. It can be used for good or for evil.

‘Don’t be evil’, Google’s adopted mantra, is not just some silly slogan.

Knowledge is power. How that power is shared or withheld from citizens matters not only for today’s projects, but for the whole future digital is helping create. Online and offline. At home and abroad.

What freedoms and what standards will be agreed upon for it to function and to what purpose? What barriers can we avoid?

When designing for the future I’d like to see discussion consider not only the patient need, and potential benefits, but also the potential risk for exploitation and behavioural change the digital solution may offer. Plus, ethical solutions to be found for equality of access.

Regulation and principles can be designed to enable success and benefits, not viewed as barriers to be overcome.

There must be an ethical compass built into the steering of the digital roadmap that the NHS is so set on, towards its digital future.

An ethical compass guiding app consumer regulation, to enable fairness of access and to ensure that when apps are downloaded or digital programmes begun, users know what they are signed up to.

Fundamental to this, as the NIB speakers all recognised at #kfdigital15, is the ethical and trustworthy extraction, storage and use of data.

There is opportunity to consider when designing the NHS digital future [as the NIB develops its roadmaps for NHS England]:

i. making principled decisions on barriers,

ii. pro-actively designing ethics and change into ongoing projects, and,

iii. ensuring engagement is genuine collaboration and co-production.

The barriers do not need to be got around; solutions need to be built by design.

***

Part 1. Digital revolution by design: building for change and people

Part 3. Digital revolution by design: building infrastructures

NIB roadmaps: https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/384650/NIB_Report.pdf
