On the 18th February 2014, a six month pause in the rollout of care.data was announced. [1] It’s now September. Six months is up.

When will we find out what concrete improvements have been made? There are open questions on plans for the WHAT of care.data Scope and its future change management, the WHO of Data Access and Sharing and its Opt out management, the HOW of Governance & Oversight, Legislation, and the WHY – Communication of the care.data programme as a whole. And WHEN will any of this happen?

What can happen in six months?

Based on Mo Farah’s average running speed of 21.8 km/hour over his gold medal winning Olympic 10,000m, and running 12 hours a day, he could have covered about 47,000 km in that time. That’s once around the world in those 180 days, with some kilometres to spare into the bargain.
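For anyone who wants to check that sum, here is a minimal sketch. The speed, hours per day and number of days are the figures quoted above; the Earth's equatorial circumference of roughly 40,075 km is an added assumption, and the names used are purely illustrative.

```python
# Back-of-envelope check of the six-month running distance.
SPEED_KMH = 21.8                 # average speed quoted in the text
HOURS_PER_DAY = 12               # running time per day, from the text
DAYS = 180                       # roughly six months, from the text
EARTH_CIRCUMFERENCE_KM = 40_075  # assumption: approximate equatorial circumference


def distance_covered_km(speed_kmh: float, hours_per_day: float, days: int) -> float:
    """Total distance at a constant speed for a fixed number of hours each day."""
    return speed_kmh * hours_per_day * days


total_km = distance_covered_km(SPEED_KMH, HOURS_PER_DAY, DAYS)
spare_km = total_km - EARTH_CIRCUMFERENCE_KM

print(f"Distance covered: {total_km:,.0f} km")
print(f"Spare after one lap of the equator: {spare_km:,.0f} km")
```

Under those assumptions the total comes out a little over 47,000 km, which is where the "once around the world, with some to spare" figure comes from.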

That’s perhaps unrealistic in 180 days, but the promises about data sharing made last February to the public, to the Health Select Committee and to Parliament were presented as both realistic and achievable.

So what about the publicly communicated changes to the care.data rollout in the six month time frame?

The letter from Mr. Kelsey on April 14th said they would use the six months to listen and act on the views of patients, public, GPs and stakeholders.

I’d like to address some of those views and see how they have been acted on. Here’s the best I have been able to put together of promises made, and the questions I still have, six months on.

Scope. What part of our records is included in care.data?

The truth is this should be the simplest question, but seems the hardest to answer. Scope is elusive, and shifting.

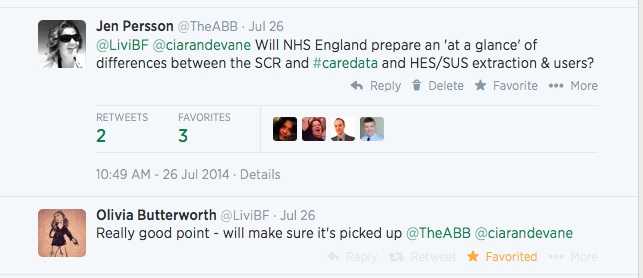

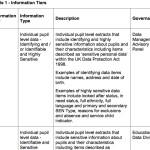

A simple description would help us understand what data will be extracted and shared, and for what purpose. The public needs an at-a-glance chart to be properly informed, to distinguish between care.data, the Summary Care Record and HES/SUS, and to see how patient data is used, by whom and for what purposes. This will help patients distinguish between direct and indirect care uses: what doctors would use in the GP practice, versus researchers in a lab. It will help set expectations for Patient Online. It could help explain data use in Risk Stratification. [See care.data-info by Dr. Neil Bhatia for high level items in scope, or the field name detail, p22 onwards] [11]. This lack of clarity was already identified in April 2013, point 3.3 [12], but nothing has been done.

In mid-August, to further complicate matters, it became apparent from published care.data advisory group minutes that the content scope is under review and may now include sensitive data. This broadening of the scope of care.data extraction and access was met with serious concern in many quarters, not least among HIV support groups. I realised I wasn’t in the least surprised, but I continue to be shocked by the disconnect between project leadership and the public.

Are the listening exercises a complete waste of time?

If people aren’t comfortable sharing basic health records, how will suggesting they share anything more sensitive be likely to encourage participation?

[The scope of how our GP part of care.data will be used is also under consideration for expansion to research – more in part two, on that.]

Scope is undefined. It will continue to expand as the replacement for SUS. In April, I wrote down my concerns at that time, most of which remain unchanged.

Stephen Dorrell MP, speaking in Parliament on the 11th March, summed up nicely why this move now to shift scope is ludicrous. If we do not have stability of scope, we cannot know to what we are consenting. This is the foundation of our patient trust.

Mr Dorrell: I am not going to comment on whether the free text data should or should not be part of the system, or on whether the safeguards are adequate. However, I agree with the hon. Lady absolutely that the one sure way of undermining public confidence in safeguards is to change those safeguards every five minutes according to whichever witness we are listening to.

If the Patients & Information Directorate at NHS England is serious about transparency, then we should be clear about all our patient data, where it comes from, where it goes to, who accesses it and why.

Data protection principle 3 requires that personal data is adequate, relevant and not excessive for its purpose; in other words, only the minimum data required should be extracted. Is this simply being ignored, as inconvenient in a project which intends its scope to keep accumulating as the SUS replacement?

“Will NHS England prepare an at-a-glance of differences between SCR and care.data, and HES/SUS extractions and users?”

Conclusion on Scope & its Communications:

This scope clarification alone would be, I believe, if well done, one of the most effective communications tools for patients to make an informed choice.

1. We need to know what parts of our personal, confidential records, sensitive or otherwise are to be extracted now.

2. How will we be informed if that scope changes in future?

3. What do we do, if we object to any of those items being included?

Before any launch of pilot or otherwise, a proper plan to ensure informed communication and choice, today and looking to future scope changes, must be clear for everyone.

What’s happened since February to the verbal agreements and promises that were made back then?

Promises have been made: in Parliament by Dan Poulter and the Secretary of State Mr. Hunt, in Select Committee hearings, by the Patients & Information Directorate at NHS England, and in the patient facing hour at the mixed-subject Open Day. But what evidence does the public have that they are real? There has been little public communication since then.

I have read, watched or attended NHS England Board meetings and Health Select Committee meetings, and read the press, media releases and social media. I’ve been to a general NHS Open Day, listened in to NHS England online events and the first HSCIC Partridge Review follow up event, and spoken to patients, public and charity groups. Had I not, I would know nothing more than I did in February: that something had been put on hold, about which I should have received a doordrop leaflet, but hadn’t.

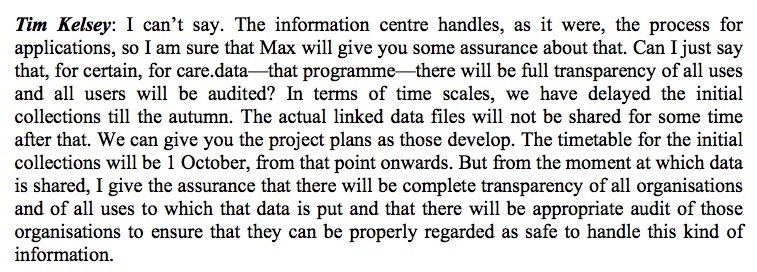

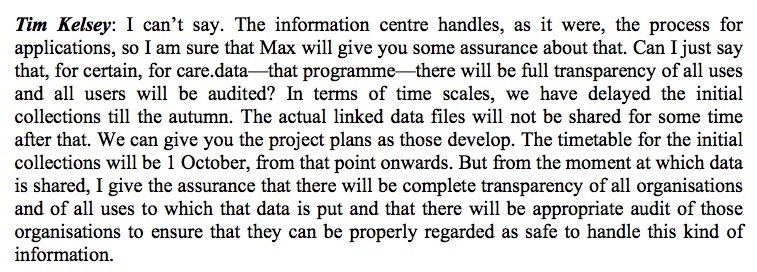

Pilot practices, or ‘pathfinders’, we were told, will trial the extraction: first in six months, then in autumn, or on October 1st according to Mr. Kelsey at the Health Select Committee (extract below).

I’ve not yet seen anywhere where these practices will be, nor any sign that patients have been informed. The latest status I read was on EHI. In response to this lack of information, medConfidential wrote to Healthwatches and CCGs with important questions and ideas. [Well worth a read].

Scope of Access – Who will get our records and for what?

Where and to whom may our data be transferred?

As part of the what of scope, we also need clarification on the who: which users, in which countries, will be in scope to access data.

“Can I confirm now, that the data connected to care.data will not be allowed outside the United Kingdom? Let me confirm that before we have further hares running,” Tim Kelsey said at the Health Select Committee.

GP care.data is to be connected with HES data, and data may be linked on demand via the Data Access Request Service (the recently renamed HSCIC Data Linkage Service, DLES). So:

Q. How will I know in future that there are no plans to release my data outside the UK and EU, as HES has been in the past?

As far as I have read, geographical scope is not legislated for. I would like to be pointed to this if it is.

From the Health Select Committee: Committee Room 15 : Meeting started on Tuesday 25 February at 2.29pm – Ended at 5.20pm

Mr. Tim Kelsey, National Director for Patients and Information stated: The pause was announced, precisely to address the issues.

“People are concerned about the purpose to what their data is being put.”

It’s not yet been addressed. Neither for the now, nor the future.

We need to have a robust mechanism in place for all future changes to the scope of use. If today I agree to have some of my data extracted and used for public health research for the public good, I don’t want to find that I’ve had all my personal details including my genomic records [which personally are somewhere in my record already] spliced with Dolly the sheep research, in the hunt for a cure for arthritis five years down the line, and there’s another me living at the Roslin Institute. [I jest to exaggerate the point, not all research definitions are equal]. A yes today cannot mean a yes for anything and everything.

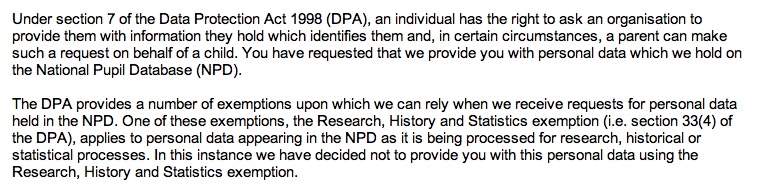

At present the opt out only allows a later ‘opt out’ to mean that data is made less identifying, ‘pseudonymous’, from the date of that request. Nothing is deleted. ‘Opt out’ is not ‘get out’.

The records from before that request date will remain clear and fully identifying for all time. So if a company requests an historical report, will our identifiable data still be included in it?

Opt out is not as simple as it sounds.

OPT OUT

The whole issue of opt out was at best an inaccurately communicated process. I believe it was misleading.

What is still wrong with this mechanism, to my mind, is that there appears to be the assumption that all data may be matched and de-identified before release. That corresponds to the September 2013 NHS England Directions to HSCIC, led by Mr. Kelsey, saying there is “no need” to take into account individual objection to pseudonymous data sharing. [2] It also corresponds to the patient leaflet, produced before any opt out changes, which stated we could object to ‘identifiable’ data sharing. That ‘identifiable’ doesn’t include all our data.

I’d like to see that clarified. Because Mr.Hunt has promised an opt out in entirety:

25th February in Parliament:

Mr. Hunt: “…we said that if we are going to use anonymised data for the benefit of scientific discovery in the NHS, people should have the right to opt out. We introduced that right and sent a leaflet to every house in the country, and it is important that we have the debate…”

“the reason why we are having the debate is that this Government decided that people should be able to opt out from having their anonymised data used for the purposes of scientific research”

Dr Julian Huppert (Cambridge) (LD): There are of course huge benefits from using properly anonymised data for research, but it is difficult to anonymise the data properly and, given how the scheme has progressed so far, there is a huge risk to public confidence. Will the Secretary of State use the current pause to work with the Information Commissioner to ensure that the data are properly anonymised and that people can have confidence in how their data will be used and how they can opt out?

Mr. Hunt: “I will do that, and NHS England was absolutely right to have a pause so that we ensure that we give people such reassurance…”

Status: the public still has no communication about any opt outs on offer or a consistent, effectively communicated method by which to request it.

Our data continues to be released regardless.

What I want to understand on opt out:

1. Can I choose to have my data used for only care, or for bona fide public health research, but not, for example, other types, such as commercial pharma marketing or data intermediaries?

2. Can I restrict the use of all my children’s data, to include all of it, including fully ‘anonymous’ data as the Secretary of State stated? Not only restricting red and amber, but all data sharing?

3. How will patients know that all of their medical data is covered by these options, not only our GP records? (For other data held see > http://www.hscic.gov.uk/datasets)

4. Will NHS staff be given the right to opt out to prevent their personal confidential data or employment data being shared as part of the workforce data set?

5. Does opt out really mean opt out – when will we see the revised definition?

6. How will objection management (storing our opt out decision) be implemented with other data sharing? (SCR, Electronic Prescription Service, OOH access, Proactive care at local level.)

7. How will objection be effectively communicated and measured?

8. Will the BMA vote [3] be ignored by the Patients & Information Directorate at NHS England? They called for an opt in system, and also for the option for data to be used only for improving care, not commercial exploitation. They appreciate the risks of losing patient confidentiality and trust.

9. Will the views of Dr. Mike Bewick, deputy medical director at NHS England, also be ignored, who said parts (referring to commercial use) should be ‘opt-in’ only? [Pulse, June 2014]

10. What will ensure opt out remains more than just Mr.Hunt’s word, if it has no legislative backing?

The opt out on offer at Christmas was to restrict identifiable data sharing. There was “no need” to take into account individual objection to pseudonymous data sharing said the September 13th NHS England directions. Those NHS England Board directions from September and December 2013 are now possibly out of date, but I’d like to see new ones which replaced them, to reassure me that an opt out that we are offered, works the way I would expect.

Most importantly for me, will the opt out be given more legislative weight (Q.10)? Today I have only the Secretary of State’s word that any “objection will be respected.” And as we all know, post holders come and go. A spoken agreement by one person may not be respected by another.

**********

ACCESS

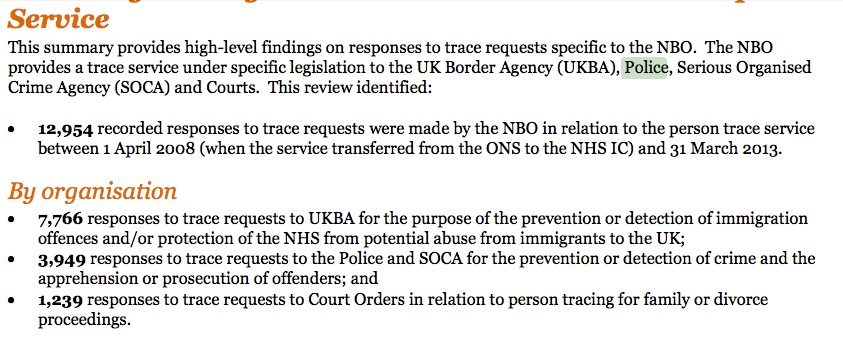

Many of the concerns around which organisations will have access to our medical records, and which were somewhat dismissed on Newsnight then, have been shown to have been legitimate concerns since:

“Access by police, sold to insurance companies, sold for commercial purposes” Newsnight, February 19th 2014

… all shown to be users of existing medical records held by the HSCIC through the Partridge Review.

Which other concerns over access were raised, and have they been addressed?

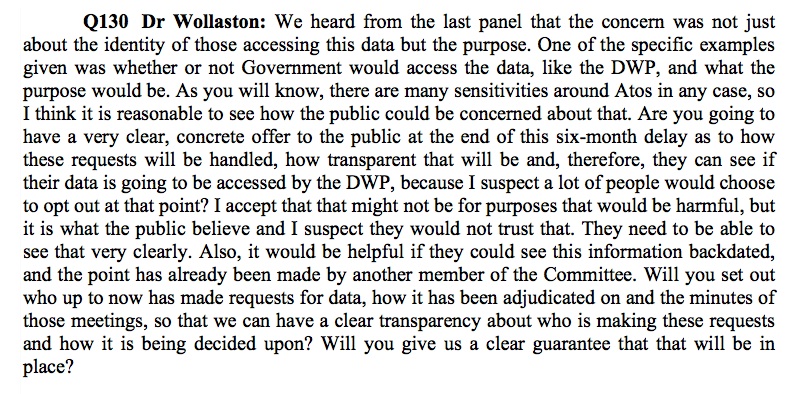

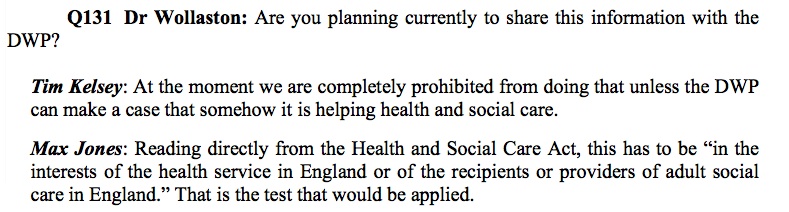

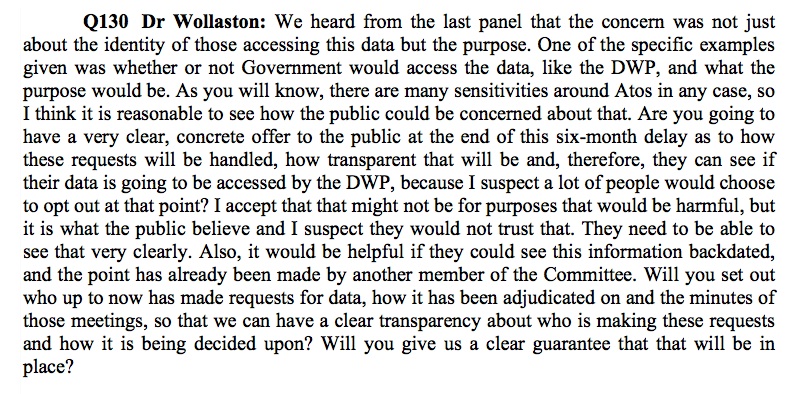

Dr. Sarah Wollaston MP, then member, now Chair, of the Health Select Committee raised the concerns of many when she asked whether other Government Departments may share care.data. Specifically she asked Mr.Kelsey,

“are you going to have a clear concrete offer to the public at the end of the six-month delay as to how these requests will be handled […] see if their data is going to be accessed by DWP […]?”

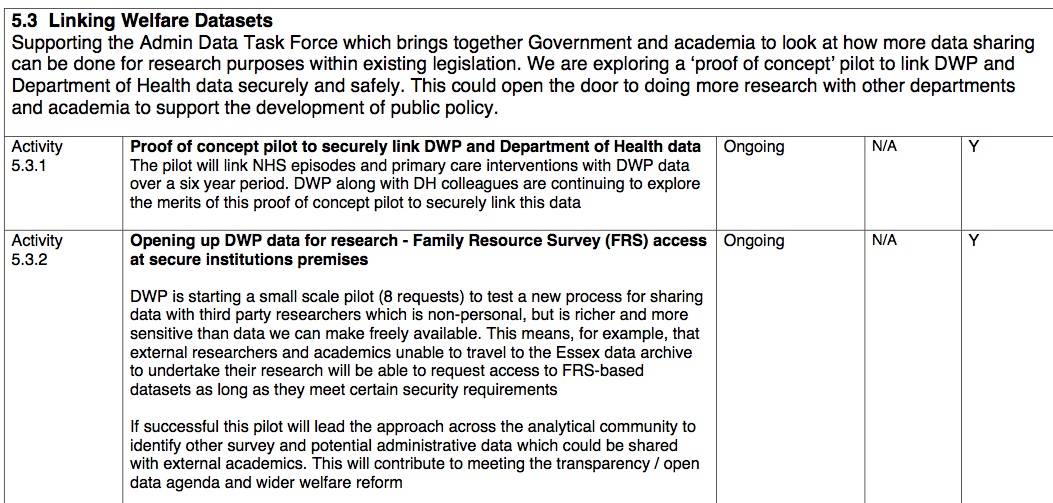

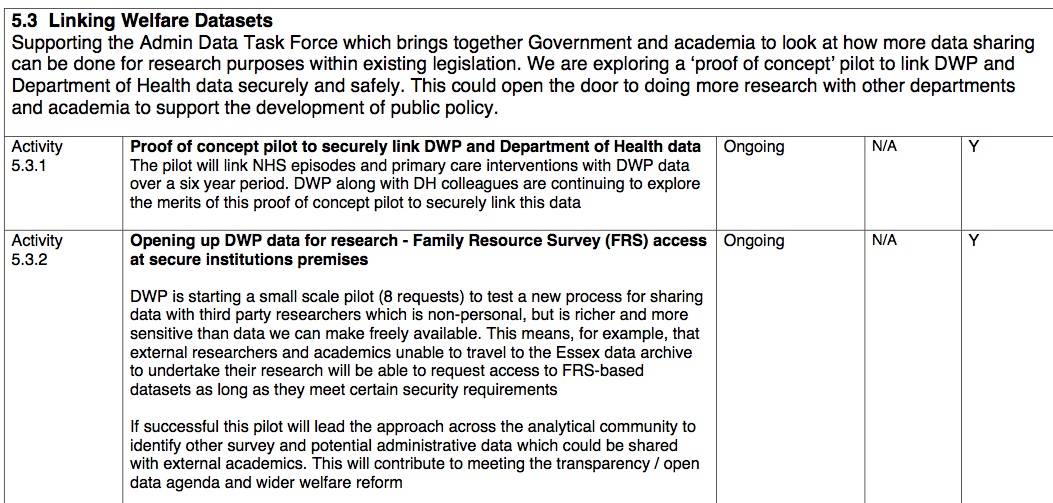

I believe this is still a very valid and open question, particularly with reference to the December 2013 Admin Data Task Force, which was exploring a ‘proof of concept’ to link DWP [6] and Department of Health data:

“Primary and Secondary Care interventions with DWP over a six year period.”

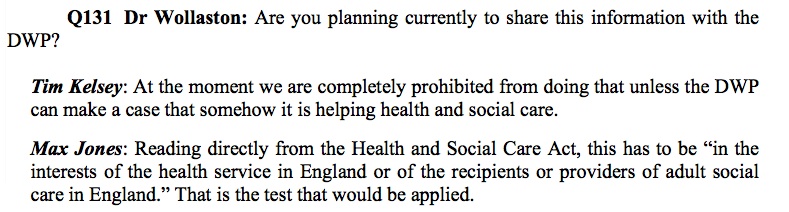

At the Health Select Committee evidence session, Mr. Kelsey and Mr. Jones did not give a straight yes/no answer to the question.

Personally I believe it is clearly possible that the DWP, in administering social care or welfare payments, will make a case under ‘health and social care’. Unless I see it in legislation that DWP will not have access to care.data or other HSCIC held data, I will assume that it is going to, and may have already, especially given the ‘primary and secondary linking’ pilot listed above.

What about other government departments’ access to health data?

A group met for the event ‘Sharing Government Administrative Data: new research opportunities’: strategic meeting on 14 July 2014, at the Wellcome Trust, London [4] – at which both care.data and DWP data had their own agenda slots.

The DWP holds other departments’ data and is “open to acting a hub.” July 2014 [7]

The Cabinet Office presenter suggested that UK legislation [9] may change to enable all departments (excluding NHS) to share data, and the ADT recommended that new ‘Data Sharing’ legislation should be put forward in the next [Parliamentary] term.

1. Since HSCIC is an ALB and not NHS, are they included in this plan to broaden sharing across government departments?

2. Will the care.data addendum of September 2013 be amended to show the public that those listed then, are no longer considered appropriate users?

3. Will Mr. Kelsey now be able to answer Dr. Wollaston MP’s question regarding DWP with a yes / no answer?

Think tanks, intermediaries and use for the purposes of actuarial refinement were all included in documents at the time, which suggested that in future the DAAG alone would review applications.

The DAAG is still called the DAAG and appears to have gone from 4 to 6 members. The Data Access Advisory Group (DAAG), hosted by the Health and Social Care Information Centre (HSCIC), considers applications for sensitive data made to the HSCIC’s Data Access Request Service.

Three key issues remain unclear to me on recent Data Release governance at DAAG:

1. Free text access, 2. Commercial use and 3. Third party use.

The July 2014 DAAG approved free text release of data for CSUs on a conditional cleansed basis, and for Civil Eyes with a caveat letter to say it shouldn’t be used for any ‘additional commercial use.’ It either is or isn’t commercial. I think this is fudging the edges of purpose and commercial use, and it is precisely why the lack of a defined scope of use undermines trust that data will be used only for proper purposes, within the definitions of the Care Act.

Free text is a concern raised on a number of occasions in Parliament and at the Health Select Committee. The HSCIC website says none will be collected in future for care.data. How can it now be approved for release, unless it has already been collected in the past, in HES? So it would appear free text has already been extracted and is being released. How are we to trust that this will not be the case for care.data?

****

In summary: after a six month pause, it remains unclear what exactly is in scope and to whom it will be released. We are still not entirely clear who will have access to what data, and why.

In part two I’ll look in brief at what legislative changes, both in the UK and wider EU may influence care.data and wider health data sharing. Plus some status updates on Research seeking approval, Changes to Oversight & Governance and Communications.

But to wrap up here, the care.data question asked in Leicestershire on the NHS England Open Day on June 17th, still sums up for me, much public feeling:

“Are we saying there will be only clinical use of the data – no marketing, no insurance, no profit making? This is our data.”

That commercial use, the concept that you are exploiting the knowledge of our vulnerability or illness, in commercial data mining, is still the largest open question, and largest barrier to public support I foresee. ‘Will the Care Act really help us with that?’ I ask in my next post.

MedConfidential have released their technical recommendations on safe settings access to data. Their analogy struck me again, as to how important it is that the use of data is seen by the users, as a collective.

Any pollution in the collective pool, will contaminate the data flow for all.

I believe the HSCIC, the NHS England Patients & Information Directorate and the Department of Health need to accept that the continued access to patient data by commercial data intermediaries is going to do that. Those users, some of whom are young and inexperienced commercial companies, need either to be excluded, or to be permitted only very stringent uses of data without commercial re-use licenses.

The commercial intermediaries still need to be told, don’t pee in the pool. It spoils it, for everyone else.

I’ll leave you with a thought on that, from Martin Collignon, Industry Analyst at Google.

**********

For part two, follow the link here, where I share my thoughts on the current status of the HOW of Governance & Oversight, Legislation, and the WHY – addressing Communication of the care.data programme as a whole. And WHEN will any of this happen?

Key refs:

[1] Second delay to care.data rollout announced – The Guardian February 18th 2014: http://www.theguardian.com/society/2014/feb/18/nhs-delays-sharing-medical-records-care-data

[2] NHS England directions to HSCIC September 13th 2013: http://www.england.nhs.uk/wp-content/uploads/2013/09/item_5.pdf

[3] BMA vote for opt In system: http://www.bmj.com/content/348/bmj.g4284

[4] July 14th at Wellcome Trust event ‘Sharing Government Administrative Data: new research opportunities’:

[5] EU Data Legislation http://www.esrc.ac.uk/_images/presentation%208_Beth%20Thompson%20Wellcome%20Trust_tcm8-31281.pdf

[6] DWP data linkage proof of concept trial 6 year period of primary and secondary data, December 2013

[7] Developments in Access to DWP data 2014

[8] NHS data sharing – Dr.Lewis care.data July 2014 presentation

[9] Possible UK Legislation http://www.esrc.ac.uk/_images/Presentation_7_Rufus_Rottenberg_tcm8-31280.pdf

[10] Progress of the changes to be made at HSCIC recommendations of the Partridge Review https://medconfidential.org/wp-content/uploads/hscic/20140903-board/HSCIC140604di_Progress_on_Partridge_review.pdf

[11] Scope list p22 onwards: http://www.england.nhs.uk/wp-content/uploads/2013/08/cd-ces-tech-spec.pdf

[12] Health and Social Care Transparency Panel April 2013 minutes https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/259828/HSCTP_13-1-mins_23_Apr_13__NewTemp_.pdf

![Care.data – my six month pause, anniversary round up [Part 1]](https://jenpersson.com/wp-content/uploads/2014/08/gps_controller-672x245.jpg)