Andy Williams said* that he wants not evolution, but a revolution in digital health.

It strikes me that few revolutions have been led top down.

We expect revolution from grassroots dissent, after a growing consensus in the population that the status quo is no longer acceptable.

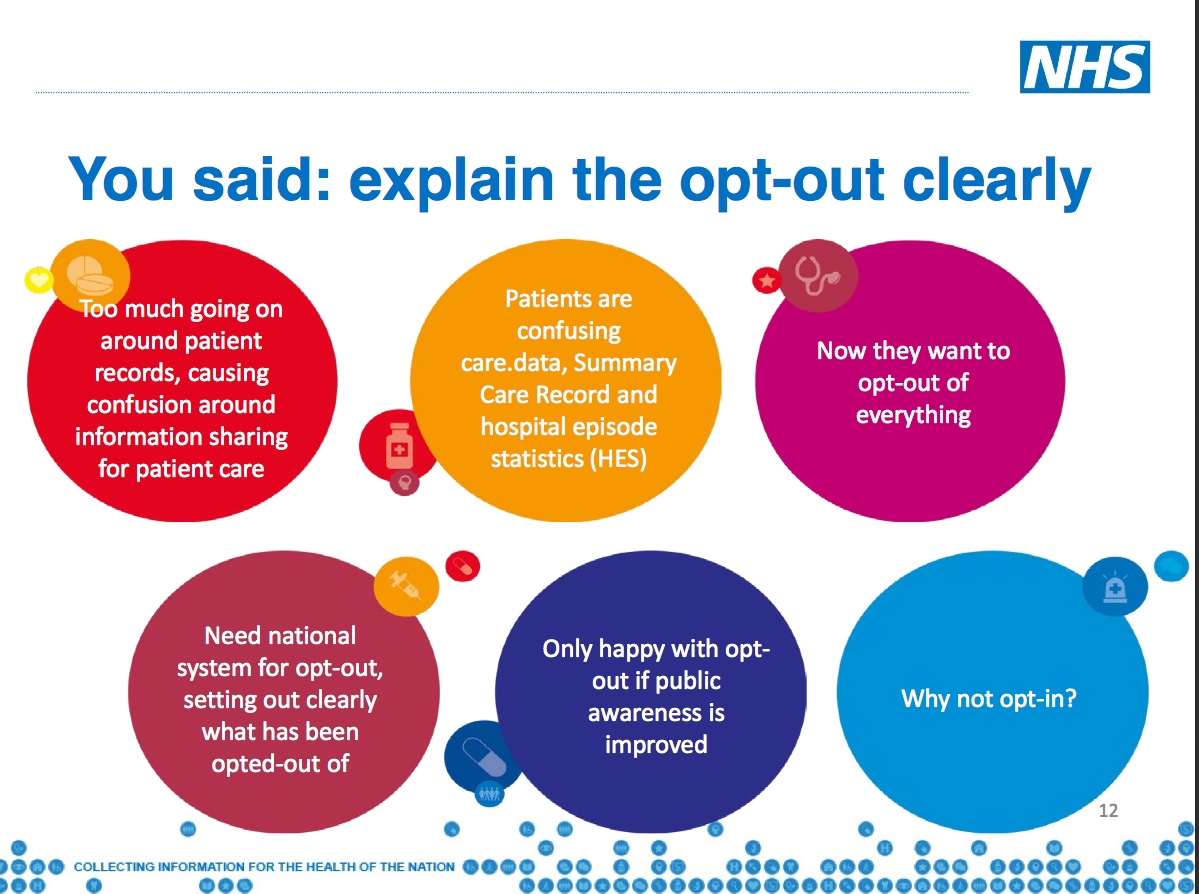

As the public discourse over the last 18 months about the NHS use of patient data has proven, we lack a consensual agreement between state, organisations and the public on how the data in our digital lives should be collected, used and shared.

The 1789 Declaration of the Rights of Man and Citizen as part of the French Revolution set out a charter for citizens, an ethical and fair framework of law in which they trusted their rights would be respected by fellow men.

That is something we need in this digital revolution.

We are told on the one hand by government that it is necessary to share all our individual-level health data from all sorts of sources.

And that bulk data collection is vital in the public interest to find surveillance knowledge that government agencies want.

At the same time other government departments plan to restrict citizens’ freedom of access to knowledge that could be used to hold the same government and civil servants to account.

On the consumer side, there is public concern about the way we are followed around on the web by companies including global platforms like Google and Facebook, that track our digital footprint to deliver advertising.

There is growing objection to the ways in which companies scoop up data to build profiles of individuals and groups and personalise how they are treated. A recent objection was to marketing misuse by charities.

There is little broad understanding yet of the power of insight that organisations can now have to track and profile due to the power of algorithms and processing capability.

Technological progress has left legislation behind.

But whenever you talk to people about data there are two common threads.

The first is that although the public is not happy with the status quo of how paternalistic organisations or consumer companies ‘we can’t live without’ manage our data, there is a feeling of powerlessness that it can’t change.

The second is frustration with organisations that show little regard for public opinion.

What happens when these feelings both reach tipping point?

If Marie Antoinette were involved in today’s debate about the digital revolution I suspect she may be the one saying: “let them eat cookies.”

And we all know how that ended.

If there is to be a digital revolution in the NHS where will it start?

There were marvelous projects going on at grassroots discussed over the two days: bringing the elderly online and connected, and work in housing and deprivation. Young patients with rare diseases are designing apps and materials to help consultants improve communications with patients.

The NIB meeting didn’t have real public interaction or any discussion of those projects ‘in the room’ in the 10 minutes offered. Considering the wealth of hands-on digital health and care experience in the audience, it was a missed opportunity for the NIB to hear common issues and listen to suggestions for co-designed solutions.

While white middle class men (for the most part) tell people of their grand plans from the top down, the revolutionaries of all kinds are quietly getting on with action on the ground.

If a digital revolution is core to the NHS future, then we need to ask to understand the intended change and outcome much more simply and precisely.

We should all understand why the NHS England leadership wants to drive change, and be given proper opportunity to question it, if we are to collaborate in its achievement.

It’s about the people, stoopid

Passive participation will not be enough from the general public if the revolution is to be as dramatic as it is painted.

Consensual co-design of plans and co-writing policy are proven ways to increase commitment to change.

Evidence suggests citizen involvement in planning is more likely to deliver success. Change done with, not to.

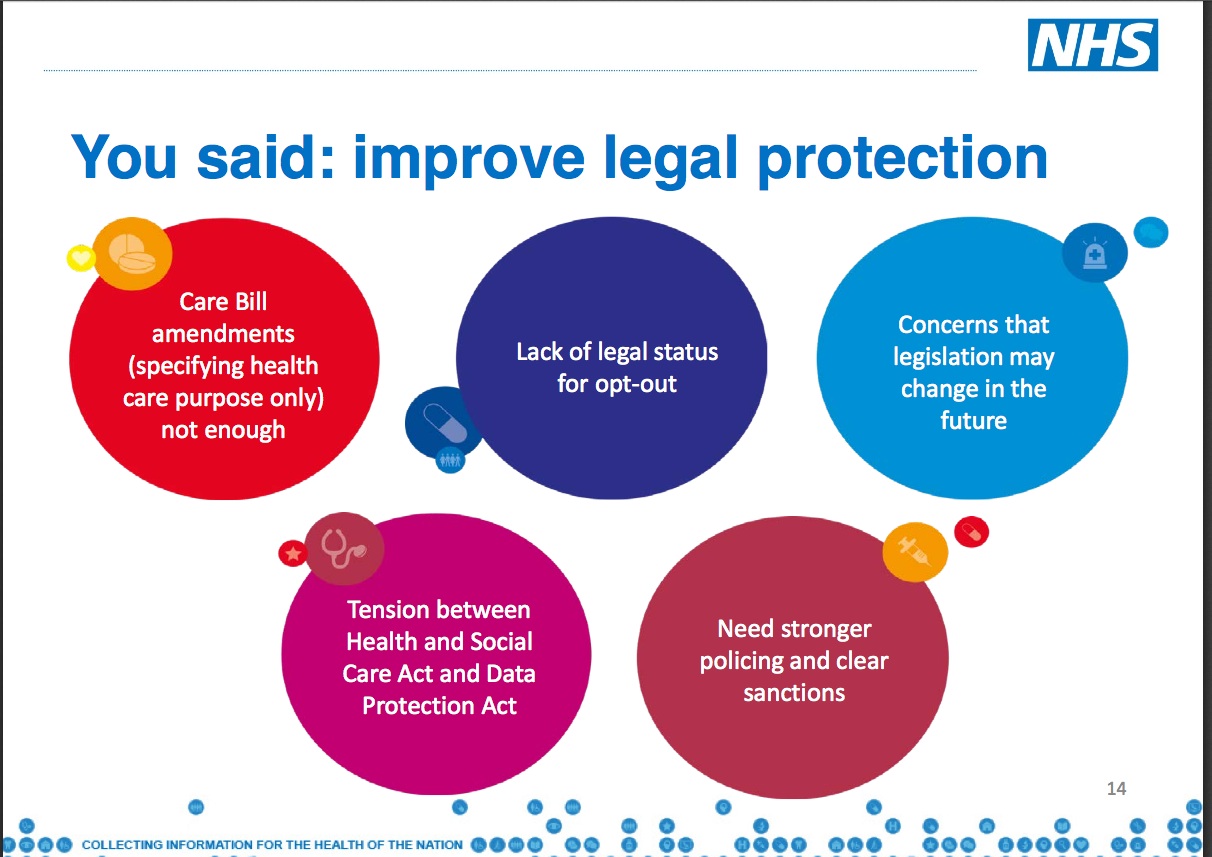

When constructive solutions have been offered, what impact does engagement have if no change is made to any plans?

If that’s engagement, you’re doing it wrong.

For those still struggling to get themselves together on the current design, it may be hard to invite public feedback on the future.

But it’s only made hard if what the public wants is ignored. If those issues were resolved in the way the public asked for at listening events it could be quite simple to solve.

The NIB leadership clearly felt nervous about having a debate, giving only 10 minutes of three hours to public involvement, yet debate is what it needs. Questions and criticism are not something to be scared of, but opportunities to make things better.

The NHS top-down digital plans need public debate, and need to be dissected by the clinical professions to see if they fit the current and future model of healthcare, because if the public and professions are not involved in the change, the ride to get there will be awfully bumpy.

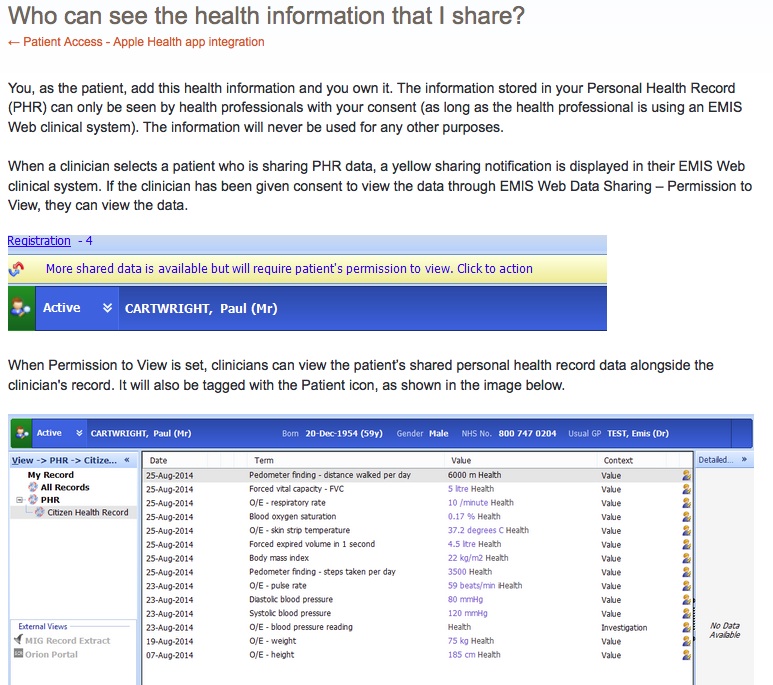

For data about us, to be used without us, is certainly an outdated model incompatible with a digital future.

The public needs to fight for citizen rights in a new social charter that demands change along lines we want, change that doesn’t just talk of co-design but that actually means it.

If unhappy about today’s data use, then the general public has to stop being content to be passive cash cows as we are data mined.

If we want data used only for public benefit research and not market segmentation, then we need to speak up. To the Information Commissioner’s Office if the organisation itself will not help.

“As Nicole Wong, who was one of President Obama’s top technology advisors, recently wrote, ‘[t]here is no future in which less data is collected and used.’

“The challenge lies in taking full advantage of the benefits that the Internet of Things promises while appropriately protecting consumers’ privacy, and ensuring that consumers are treated fairly.” Julie Brill, FTC, May 4 2015, Berlin

In the rush to embrace the ‘Internet of Things’ it can feel as though the reason for creating them has been forgotten. If the Internet serves things, it serves consumerism. AI must tread an enlightened path here. If the things are designed to serve people, then we would hope they offer methods of enhancing our life experience.

In the dream of turning a “tsunami of data” into a “tsunami of actionable business intelligence,” it seems all too often the person providing the data is forgotten.

While the Patient and Information Directorate, NHS England or NIB speakers may say these projects are complex and that the benefits are hard to communicate, I’d say if you can’t communicate the benefits, it’s not the fault of the audience.

People shouldn’t have to either a) spend immeasurable hours of their personal time understanding how the projects that want their personal data work, or b) put up with being ignorant.

We should be able to fully question why it is needed and get a transparent and complete explanation. We should have fully accountable business plans and scrutiny of tangible and intangible benefits in public, before projects launch based on public buy-in which may be misplaced. We should expect plans to be accessible to everyone, and documents to be straightforward enough to make them so.

Even after listening to a number of these meetings and board meetings, I am not sure many would be able to put succinctly: what is the NHS digital forward view really? How is it to be funded?

On the one hand new plans are to bring salvation, while on the other, funding is stopped for what already works today.

Although the volume of activity planned is vast, what it boils down to is what is visionary and achievable, and not just a vision.

Digital revolution by design: building for change and people

We have the opportunity to build well now, avoiding barriers-by-design, pro-actively designing ethics and change into projects, and ensuring that the work is collaborative.

Change projects must map out their planned effects on people before implementing technology. For the NHS that’s staff and public.

The digital revolution must ensure the fair and ethical use of the big data that will flow for direct care and secondary uses if it is to succeed.

It must also look beyond its own bubble of development as it shapes its plans, to the ever-changing infrastructures in which data, digital, AI and ethics will become important to discuss together.

That includes in medicine.

Design for the ethics of the future, and enable change mechanisms in today’s projects that will cope with shifting public acceptance, because that shift has already begun.

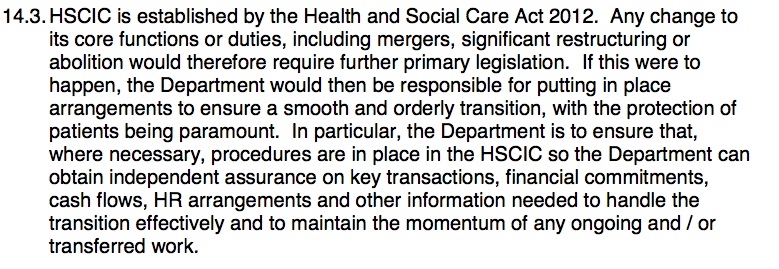

Projects whose ethics and infrastructures of governance were designed years ago have been overtaken in the digital revolution.

Projects with an old style understanding of engagement are not fit-for-the-future. As Simon Denegri wrote, we could have 5 years to get a new social charter and engagement revolutionised.

Tim Berners-Lee, when he called for a Magna Carta on the Internet, asked for help to achieve the web he wants:

“do me a favour. Fight for it for me.”

The charter, as part of the French Revolution, set out a clear, understandable, ethical and fair framework of law in which people trusted their rights would be respected by fellow citizens.

We need one for data in this digital age. The NHS could be a good place to start.

****

It’s exciting hearing about the great things happening at grassroots. And incredibly frustrating to then see barriers to them being built top down. More on that shortly, on the barriers of cost, culture and geography.

****

* at the NIB meeting held on the final afternoon of the Digital Conference on Health & Social Care at the King’s Fund, June 16-17.

NEXT>>

2. Driving Digital Health: revolution by design

3. Digital revolution by design: building infrastructure

Refs:

Apps for sale on the NHS website

Whose smart city? Resident involvement

Data Protection and the Internet of Things, Julie Brill FTC

A Magna Carta for the web
