“Lawmaking is the Wire, not Schoolhouse Rock. It’s about blood and war and power, not evidence and argument and policy.”

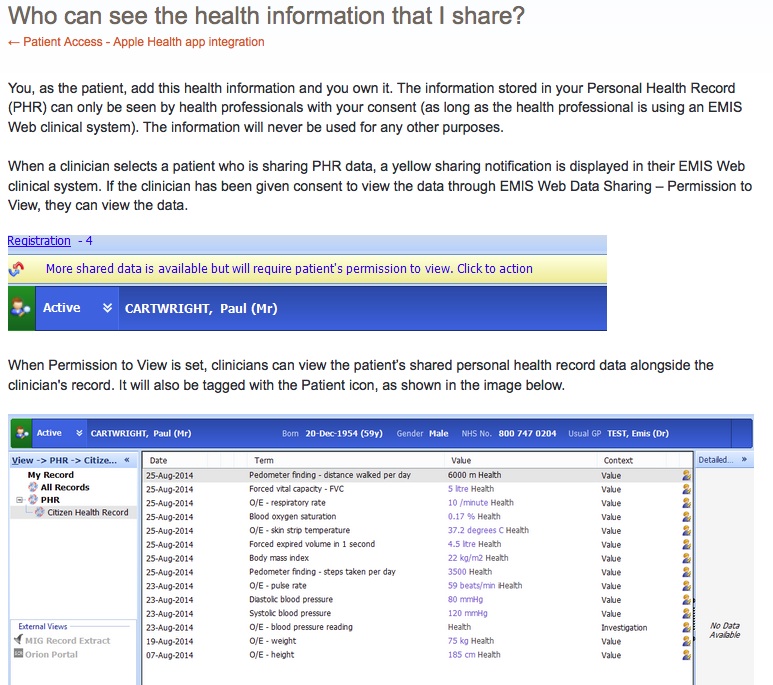

"We can't trust the regulators," they say. "We need to be able to investigate the data for ourselves." Technology seems to provide the perfect solution. Just put it all online - people can go through the data while trusting no one. There's just one problem. If you can't trust the regulators, what makes you think you can trust the data?

Extracts from The Boy Who Could Change the World: The Writings of Aaron Swartz. Chapter: ‘When is Technology Useful?’, June 2009.

The question keeps getting asked: is the concept of ethics obsolete in Big Data?

I’ve come to some conclusions about why ‘Big Data’ use keeps pushing the boundaries of what many people find acceptable, and yet the people doing the research, the regulators and lawmakers often express surprise at negative reactions. Some even express disdain for public opinion, dismissing it as ignorant, not ‘understanding the benefits’, yet to be convinced. I’ve decided why I think what is considered ‘ethical’ in data science does not meet public expectation.

It’s not about people.

Researchers using large datasets often have a foundation in data science, applied computing or maths, and don’t see data as people. It’s only data. Creating patterns, correlations, and analysis of individual level data are not seen as research involving human subjects.

This is embodied in the many research ethics reviews I have read in the last year in which the question is asked: does the research involve people? The answer given is invariably ‘no’.

And these data analysts using, let’s say health data, are not working in a subject that is founded on any ethical principle, contrasting with the medical world the data come from.

The public feels differently about the information that is about them, and that may be known only to them or to select professionals. The values that we as the public attach to our data, and our expectations of its handling, may reflect the expectations we have of the handling of us as the people connected to it. We see our data as all about us.

The values that are therefore put on data, and on how it can and should be used, can be at odds with one another: the public perception is not reciprocated by the researchers. This may be especially true if researchers are using data which has been de-identified, although it may not be anonymous.

New legislation on the horizon, the Better Use of Data in Government, intends to fill the [loop]hole between what was legal to share in the past and what some want to exploit today, and emphasises a gap between the uses of data by public interest, academic researchers, and uses by government actors. The first by and large incorporate privacy and anonymisation techniques by design, whereas the second are designed for applied use of identifiable data.

Government departments and public bodies want to identify and track people who are somehow misaligned with the values of the system; either through fraud, debt, Troubled Families, or owing Student Loans. All highly sensitive subjects. But their ethical data science framework will not treat them as individuals, but only as data subjects. Or as groups who share certain characteristics.

The system again intrinsically fails to see these uses of data as being about individuals, but sees them as categories of people: “fraud”, “debt”, “Troubled families”. It is designed to profile people.

Services that weren’t built for people, but for government processes, result in datasets used in research, that aren’t well designed for research. So we now see attempts to shoehorn historical practices into data use by modern data science practitioners, with policy that is shortsighted.

We can’t afford for these things to be so off axis, if civil service thinking is exploring “potential game-changers such as virtual reality for citizens in the autism spectrum, biometrics to reduce fraud, and data science and machine-learning to automate decisions.”

In an organisation such as DWP this must be really well designed since “the scale at which we operate is unprecedented: with 800 locations and 85,000 colleagues, we’re larger than most retail operations.”

The power to affect individual lives through poor technology is vast and some impacts seem to be being badly ignored. The ‘real time earnings’ database improved accuracy of benefit payments, but was widely agreed to have been harmful to some individuals through the Universal Credit scheme, with delayed payments meaning families at foodbanks, and contributing to worse.

“We believe execution is the major job of every business leader,” is perhaps not the best wording on DWP data uses.

What accountability will be built in by design?

I’ve been thinking recently about drawing a social ecological model of personal data empowerment or control. Thinking about visualisation of wants, gaps and consent models, to show rather than tell policy makers where these gaps exist in public perception and expectations, policy and practice. If anyone knows of one on data, please shout. I think it might be helpful.

But the data *is* all about people

Regardless of whether they are in front of you or numbers on a screen, big or small datasets using data about real lives are data about people. And that triggers a need to treat the data with an ethical approach, as you would people involved face-to-face.

Researchers need to stop treating data about people as meaningless data because that’s not how people think about their own data being used. Not only that, but if the whole point of your big data research is to have impact, your data outcomes will change lives.

Tosh, I know some say. But, as I have argued, the reason is that the applications of the data science/ research/ policy findings / impact of immigration in education review / [insert purposes of the data user’s choosing] are designed to have impact on people. Often these are the people about whom the research is done without their knowledge or consent. And while most people say that is OK where it’s public interest research, the possibilities are outstripping what the public has expressed as acceptable, and few seem to care.

Evidence from public engagement and ethics all says that hidden pigeon-holing, profiling, is unacceptable. Data Protection law has special requirements for it, on automated decisions. ‘Profiling’ is now clearly defined under Article 4 of the GDPR as “any form of automated processing of personal data consisting of using those data to evaluate certain personal aspects relating to a natural person, in particular to analyse or predict aspects concerning that natural person’s performance at work, economic situation, health, personal preferences, interests, reliability, behaviour, location or movements.”

Research using big datasets that ‘isn’t interested in individuals’ may still intend to create results that profile groups for applied policing, that discriminate, or that make knowledge available by location. The data may have been de-identified, but in application they are no longer anonymous.

Profiling groups may be the very point of Big Data research that intends applied health policy impacts for good, with the intent of improving a particular ethnic minority’s access to services, for example.

Then look at the voting process changes in North Carolina and see how that same data, the same research knowledge might be applied to exclude, to restrict rights, and to disempower.

Is it possible to have ethical oversight that can protect good data use and protect people’s rights if they conflict with the policy purposes?

The “clear legal basis” is not enough for public trust

Data use can be legal and can still be unethical, harmful and shortsighted in many ways, for both the impacts on research – in terms of withholding data and falsifying data and avoiding the system to avoid giving in data – and the lives it will touch.

What education has to learn from health is whether it will permit the uses by ‘others’ outside education to jeopardise the collection of school data intended in the best interests of children, not the system. In England it must start to analyse what is needed vs wanted. What is necessary and proportionate and justifies maintaining named data indefinitely, exposed to changing scope.

In health, the most recent Caldicott review suggests scope change by design – that is a red line for many: “For that reason the Review recommends that, in due course, the opt-out should not apply to all flows of information into the HSCIC. This requires careful consideration with the primary care community.”

The community spoke out already, and strongly, in Spring and Summer 2014, saying that there must be an absolute right to confidentiality to protect patients’ trust in the system. Scope that ‘sounds’ like it might sneakily change in future will be a death knell to public interest research, because repeated trust erosion will be fatal.

Laws change to allow scope change without informing people whose data are being used for different purposes

Regulators must be seen to be trusted, if the data they regulate is to be trustworthy. Laws and regulators that plan scope for the future watering down of public protection, water down public trust from today. Unethical policy and practice will not be saved by pseudo-data-science ethics.

Will those decisions in private political rooms be worth the public cost to research, to policy, and to the lives it will ultimately affect?

What happens when the ethical black holes in policy, lawmaking and practice collide?

At the last UK HealthCamp towards the end of the day, when we discussed the hard things, the topic inevitably moved swiftly to consent, to building big databases, public perception, and why anyone would think there is potential for abuse, when clearly the intended use is good.

The answer came back from one of the participants, “OK now it’s the time to say. Because, Nazis.” Meaning, let’s learn from history.

Given the state of UK politics, Go Home van policies, restaurant raids, the possibility of Trump getting access to UK sensitive data of all sorts from across the Atlantic, given recent policy effects on the rights of the disabled and others, I wonder if we would hear the gentle laughter in the room in answer to the same question today.

With what is reported as a sharp change in Whitehall’s digital leadership today, the future of digital in government services and policy and lawmaking does indeed seem to be more “about blood and war and power,” than “evidence and argument and policy”.

The concept of ethics in datasharing using public data in the UK is far from becoming obsolete. It has yet to begin.

We have ethical black holes in big data research, in big data policy, and big data practices in England. The conflicts between public interest research and government uses of population wide datasets, the difference between how the public perceive the use of our data and how they are used, and the gaps and tensions in policy and practice are all there.

We are simply waiting for the Big Bang. Whether it will be creative, or destructive we are yet to feel.

*****

image credit: LIGO – graphical visualisation of black holes on the discovery of gravitational waves

References:

Report: Caldicott review – National Data Guardian for Health and Care Review of Data Security, Consent and Opt-Outs 2016

Report: The One–Way Mirror: Public attitudes to commercial access to health data

Royal Statistical Society Survey carried out by Ipsos MORI: The Data Trust Deficit
