“Whatever the social issue we want to grasp – the answer should always begin with family.”

Not my words, but David Cameron’s. Just five years ago, Conservative policy was all about “putting families at the centre of domestic policy-making.”

Debate on the Online Harms White Paper, thanks in part to media framing of its own departmental making, is almost all about children. But I struggle with the debate that leaves out our role as parents almost entirely, other than as bereft or helpless victims ourselves.

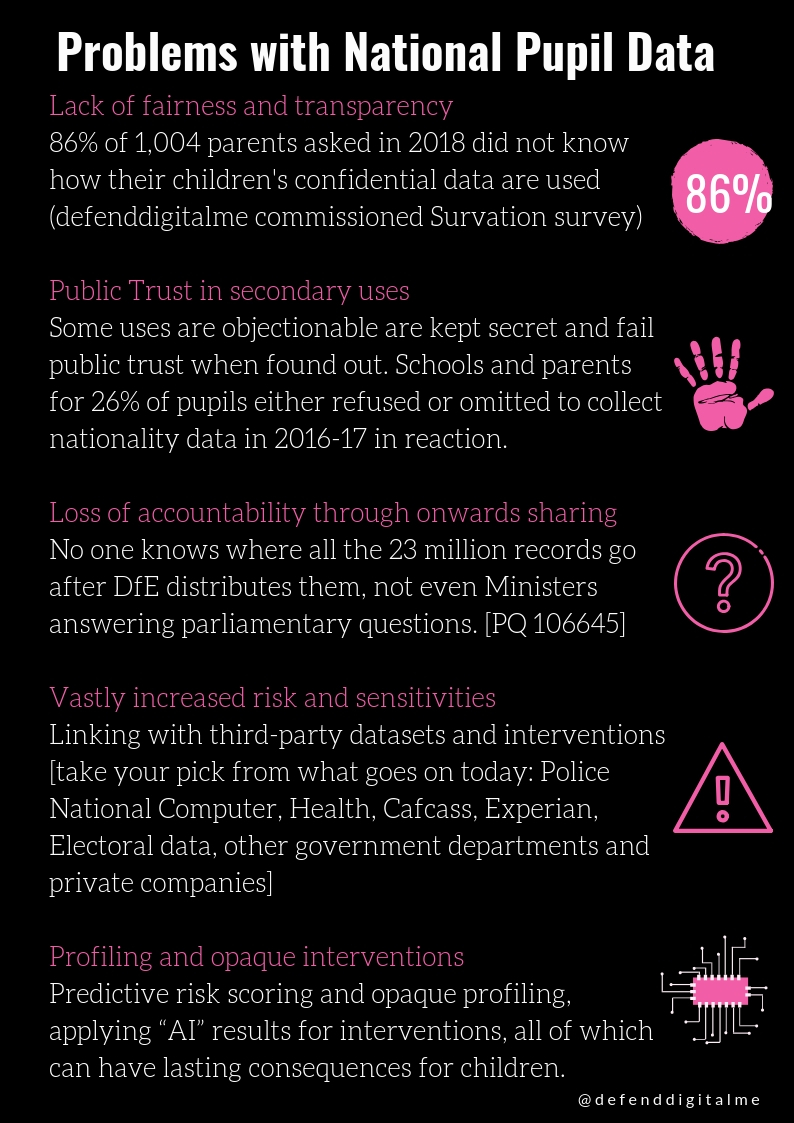

I am conscious, wearing my other hat of defenddigitalme, that not all families are the same, and not all children have families. Yet it seems counter to conservative values, for a party that traditionally places the family at the centre of policy, to leave parents out, or to absolve them of responsibility for their children’s actions and care online.

Parental responsibility cannot be outsourced to tech companies, nor can we simply accept that it’s too hard to police our children’s phones. If we as parents are concerned about harms, it is our responsibility to enable access to that which is not harmful, and to be aware of, and educate ourselves and our children on, what is. We are aware of what they read in books. I cast an eye over what they borrow or buy. I play a supervisory role.

Brutal as it may be, the Internet is not responsible for suicide. It’s just not that simple. We cannot bring children back from the dead. We certainly can, as society and policy makers, try to create the conditions in which harms are not normalised and do not become more common, and seek to reduce risk. But few would suggest social media is a single source of children’s mental health issues.

What policy makers are trying to regulate is in essence, not a single source of online harms but 2.1 billion users’ online behaviours.

It follows that to see social media as a single source of attributable fault per se, is equally misplaced. A one-size-fits-all solution is going to be flawed, but everyone seems to have accepted its inevitability.

So how will we make the least bad law?

If we are to have sound law that can be applied around what is lawful, we must reduce the substance of debate by removing what is already unlawful and has appropriate remedy and enforcement.

Debate must also try to be free from emotive content and language.

I strongly suspect the language around ‘our way of life’ and ‘values’ in the White Paper comes from the Home Office. So while it sounds fair and just, we must remember reality in the background of TOEIC, of Windrush, of children removed from school because their national records are being misused beyond educational purposes. The Home Office is no friend of child rights, and does not foster the societal values that break down discrimination and harm. It instead creates harms of its own making, and division by design.

I’m going to quote Graham Smith, for I cannot word it better.

“Harms to society, feature heavily in the White Paper, for example: content or activity that:

“threatens our way of life in the UK, either by undermining national security, or by reducing trust and undermining our shared rights, responsibilities and opportunities to foster integration.”

Similarly:

“undermine our democratic values and debate”;

“encouraging us to make decisions that could damage our health, undermining our respect and tolerance for each other and confusing our understanding of what is happening in the wider world.”

This kind of prose may befit the soapbox or an election manifesto, but has no place in or near legislation.”

[Cyberleagle, April 18, 2019, Users Behaving Badly – the Online Harms White Paper]

My key concern in this area is that from a feeling of ‘it is all awful’ stems the sense that ‘all regulation will be better than now’. That comes with a real risk of increasing current practices that would not be better than now, and that in fact need fixing.

More monitoring

The first is today’s general monitoring of school children’s Internet content for risk and harms, which creates unintended consequences and very real harms of its own, at the moment without oversight.

In yesterday’s House of Lords debate, Lord Haskel said,

“This is the practicality of monitoring the internet. When the duty of care required by the White Paper becomes law, companies and regulators will have to do a lot more of it.” [April 30, HOL]

The Brennan Centre yesterday published its research on the spend by US schools purchasing social media monitoring software from 2013-18, and highlighted some of the issues:

“Aside from anecdotes promoted by the companies that sell this software, there is no proof that these surveillance tools work [compared with other practices]. But there are plenty of risks. In any context, social media is ripe for misinterpretation and misuse.” [Brennan Centre for Justice, April 30, 2019]

That monitoring software focuses on two things —

a) seeing children through the lens of terrorism and extremism, and b) harms caused by them to others, or as victims of harms by others, or self-harm.

It is the near same list of ‘harms’ topics that the White Paper covers. Co-driven by the same department interested in it in schools — the Home Office.

These concerns are set in the context of the direction of travel of law and policy making, its own loosening of accountability and process.

It was preceded by a House of Commons discussion on Social Media and Health, led by the former Minister for Digital, Culture, Media and Sport, who seems to feel more at home in that sphere than in health.

His unilateral award of funds to the Samaritans for work with Google and Facebook on a duty of care, while the very same is still under public consultation, is surprising to say the least.

But it was his response to this question that points to the slippery slope down which such regulations may lead. Freedom of speech champions should be most concerned not even by what is potentially in any legislation ahead, but by the direction of travel and the debate around it.

“Will he look at whether tech giants such as Amazon can be brought into the remit of the Online Harms White Paper?”

He replied that “Amazon sells physical goods for the most part and surely has a duty of care to those who buy them, in the same way that a shop has a responsibility for what it sells. My hon. Friend makes an important point, which I will follow up.”

Mixed messages

The Center for Democracy and Technology recommended in its 2017 report, Mixed Messages? The Limits of Automated Social Media Content Analysis, that the use of automated content analysis tools to detect or remove illegal content should never be mandated in law.

Debate so far has demonstrated broad gaps in knowledge, and between what is wanted and what is possible. If behaviours are to be stopped because they are undesirable rather than unlawful, we open up a whole can of worms unless it is done with the greatest attention to detail.

Lord Stevenson and Lord McNally both suggested that pre-legislative scrutiny of the Bill, and more discussion would be positive. Let’s hope it happens.

Here are my first personal reflections on the Online Harms White Paper discussion so far.

Six suggestions:

Suggestion one:

The Law Commission Review, mentioned in the House of Lords debate, may provide what I have been thinking of crowd sourcing, and now may not need to: a list of the laws that the discussion around the Online Harms White Paper reaches into, so that we can compare what is needed in debate with what is being sucked in. We should aim to curtail emotive discussion of the broad risks and threats that people experience online. This would allow debate to avoid the themes which are already covered in law, and to focus on the gaps. It would make for much tighter and more effective legislation. For example, the Crown Prosecution Service offers Guidelines on prosecuting cases involving communications sent via social media, but a wider list of law is needed.

Suggestion two:

After (1) defining what legislation is lacking, definitions must be very clear, narrow, and consistent with other legislation. They should not be for the regulator to determine ad hoc and alone.

Suggestion three:

If children’s rights are to be so central in discussion on this paper, then their wider rights, including privacy and participation, access to information and freedom of speech, must be included in debate. This should include academic research-based evidence of children’s experience online when making the regulations.

Suggestion four:

Internet surveillance software in schools should be publicly scrutinised. A review should establish the efficacy, boundaries and oversight of policy and practice regarding Internet monitoring for harms, and no more monitoring should be embedded without it. Boundaries should be put into legislation for clarity and consistency.

Suggestion five:

Terrorist activity or child sexual exploitation and abuse (CSEA) online are already unlawful and should not need additional Home Office powers. Great caution must be exercised here.

Suggestion six:

Legislation could and should encapsulate accountability and oversight for micro-targeting and algorithmic abuse.

More detail behind my thinking follows below, after the break. [Structure rearranged on May 14, 2019]
