This blog post is also available as an audio file on SoundCloud.

What constitutes the public interest must be set in a universally fair and transparent ethics framework if the benefits of research are to be realised, whether in social science, health, education or elsewhere. That framework will provide a strategy for getting the prerequisite success factors right, ensuring research in the public interest is not only fit for the future, but thrives. There has been a climate change in consent. We need to stop talking about barriers that prevent datasharing and start talking about the boundaries within which we can share.

What is the purpose for which I provide my personal data?

‘We use math to get you dates’, says OkCupid’s tagline.

That’s the purpose of the site. It’s the reason people log in and create a profile, enter their personal data and post it online for others who are looking for dates to see. The purpose is to get a date.

When over 68K OkCupid users registered for the site to find dates, they didn’t sign up to have their identifiable data used and published in ‘a very large dataset’ and onwardly re-used by anyone with unregistered access. The users’ data were extracted “without the express prior consent of the user […].”

Whether the purposes consented to at registration are compatible with the purposes to which the researcher put the data should be a simple enough question. Are the research purposes what the person signed up to, or would they be surprised to find out their data were used like this?

These are questions the “OkCupid data snatcher”, now self-confessed ‘non-academic’ researcher, thought unimportant to consider.

But it appears in the last month, he has been in good company.

Google DeepMind, and the Royal Free, big players who do know how to handle data and consent well, paid too little attention to the very same question of purposes.

The boundaries of how the users of OkCupid had chosen to reveal information and to whom, have not been respected in this project.

Nor were these boundaries respected by the Royal Free London trust that gave out patient data for use by Google DeepMind with changing explanations, without clear purposes or permission.

The legal boundaries in these recent stories appear unclear or to have been ignored. The privacy boundaries deemed irrelevant. Regulatory oversight lacking.

The respectful ethical boundaries of consent to purposes have indisputably broken down, with autonomy disregarded, whether by commercial organisation, public body, or lone ‘researcher’.

Research purposes

The crux of data access decisions is purposes. What question is the research to address – what is the purpose for which the data will be used? The intent by Kirkegaard was to test:

“the relationship of cognitive ability to religious beliefs and political interest/participation…”

In this case the question appears intended rather as a test of the data, not the data opened up to answer the question. While methodological studies matter, given the care and attention [or self-stated lack thereof] given to the data's extraction and to any attempt to be representative and fair, it would appear this is not the point of this study either.

The data don’t include profiles identified as heterosexual male, because ‘the scraper was’. It is also unknown how many users hide their profiles, “so the 99.7% figure [identifying as binary male or female] should be cautiously interpreted.”

“Furthermore, due to the way we sampled the data from the site, it is not even representative of the users on the site, because users who answered more questions are overrepresented.” [sic]

The paper goes on to say photos were not gathered because they would have taken up a lot of storage space and could be done in a future scraping, and

“other data were not collected because we forgot to include them in the scraper.”

The data are knowingly of poor quality, inaccurate and incomplete. The project cannot be repeated as ‘the scraping tool no longer works’. There is an unclear ethical or peer review process, and the research purpose is at best unclear. We can certainly give someone the benefit of the doubt and say intent appears to have been entirely benevolent. It’s not clear what the intent was. I think it is clearly misplaced and foolish, but not malevolent.

The trouble is, it’s not enough to say, “don’t be evil.” These actions have consequences.

When the researcher asserts in his paper that, “the lack of data sharing probably slows down the progress of science immensely because other researchers would use the data if they could,” in part he is right.

Google and the Royal Free have tried more eloquently to say the same thing. It’s not research, it’s direct care; in effect, ignore that people are no longer our patients and that we’re using historical data without re-consent. We know what we’re doing, we’re the good guys.

However, the principles are the same, whether it’s a lone project or global giant. And they’re both wildly wrong as well. More people must take this on board. It’s the reason the public interest needs the Dame Fiona Caldicott review published sooner rather than later.

Just because there is a boundary to data sharing in place does not mean it is a barrier to be ignored or overcome. Like the registration step to the OkCupid site, consent and the right to opt out of medical research in England and Wales are there for a reason.

We’re desperate to build public trust in UK research right now. So to assert that the lack of data sharing probably slows down the progress of science is misplaced, when it is getting ‘sharing’ wrong that caused the lack of trust in the first place and harms research.

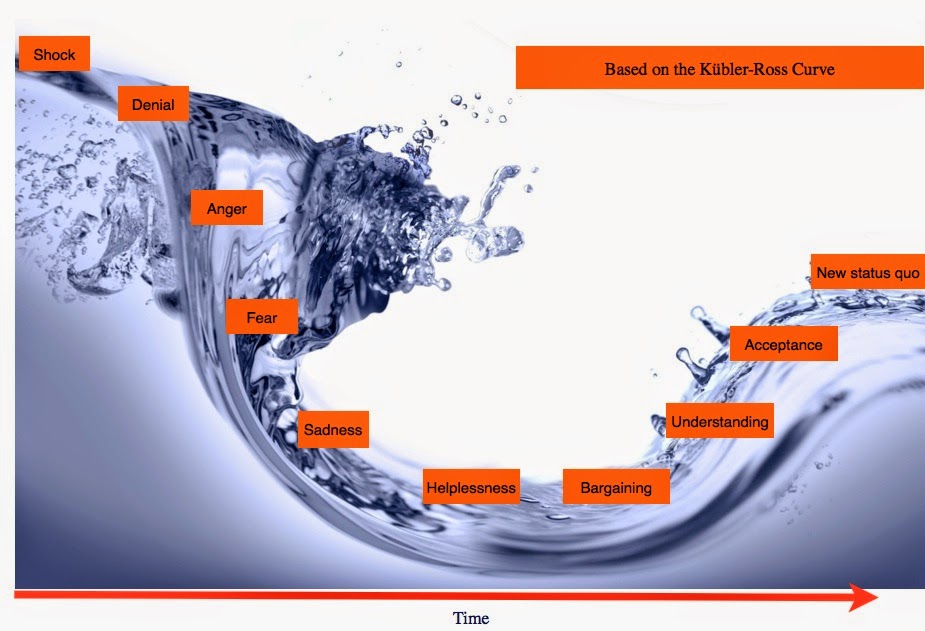

A climate change in consent

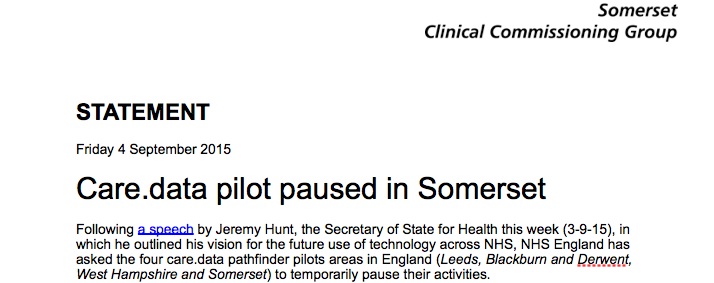

There has been a climate change in public attitude to consent since care.data, clouded by the smoke and mirrors of state surveillance. It cannot be ignored. The EU GDPR supports it. Researchers may not like change, but there needs to be a corresponding adjustment in expectations and practice.

Without change, there will be no change. Public trust is low. As technology advances and if we continue to see commercial companies get this wrong, we will continue to see public trust falter unless broken things get fixed. Change is possible for the better. But it has to come from companies, institutions, and people within them.

Like climate change, you may deny it if you choose to. But some things are inevitable and unavoidably true.

There is strong support for public interest research but that is not to be taken for granted. Public bodies should defend research from being sunk by commercial misappropriation if they want to future-proof public interest research.

The purposes for which people gave consent are the boundaries within which you have permission to use data; they give you freedom, within their limits, to use the data. Purposes and consent are not barriers to be overcome.

If research is to win back public trust, developing a future-proofed, robust ethical framework for data science must be a priority today.

Commercial companies must overcome the low levels of public trust they have generated to date if they ask ‘trust us because we’re not evil’. If you can’t rule out the use of data for other purposes, it’s not helping. If you delay independent oversight, it’s not helping.

This case study, and indeed the recent Google DeepMind episode by contrast, demonstrate how urgently three things must be made a priority, in the UK and beyond: working out common expectations and oversight of applied ethics in research, deciding who gets to say what is ‘in the public interest’, and public engagement in data science.

Boundaries in the best interest of the subject and the user

Society needs research in the public interest. We need good decisions made on what will be funded and what will not be, on what will influence public policy, and on where attention is needed for change.

To do this ethically, we all need to agree what is fair use of personal data, when it is closed and when it is open, what is direct and what are secondary uses, and how advances in technology are used when they present both opportunities for benefit and risks of harm to individuals, to society and to research as a whole.

The benefits of research are potentially being compromised for the sake of arrogance, greed, or misjudgement, no matter the intent. Those benefits cannot come at any cost, or disregard public concern, or the price will be trust in all research itself.

In discussing this with social science and medical researchers, I realise not everyone agrees. For some, using deidentified data in trusted third party settings poses such a low privacy risk that they feel the public should have no say in whether their data are used in research, as long as it’s ‘in the public interest’.

The DeepMind researchers and the Royal Free were confident that, even using identifiable data, this was the “right” thing to do, without consent.

In the Cabinet Office datasharing consultation, in the parts that will open up national registries and share identifiable data more widely and with commercial companies, they are convinced it is all the “right” thing to do, without consent.

How can researchers, society and government understand what is good ethics of data science, as technology permits ever more invasive or covert data mining and the current approach is desperately outdated?

Who decides where those boundaries lie?

“It’s research Jim, but not as we know it.” This is one aspect of data use that ethical reviewers will need to deal with, whether for independents or commercial organisations, as we advance the debate on data science in the UK. Google said their work was not research. Is ‘OkCupid’ research?

If this research and data publication proves anything at all, and can offer lessons to learn from, it is perhaps these three things:

Who is accredited as a researcher or ‘prescribed person’ matters. It matters if we are considering new datasharing legislation and, for example, to whom the UK government is granting access to millions of children’s personal data today. Your idea of a ‘prescribed person’ may not be the same as the rest of the public’s.

Researchers and ethics committees need to adjust to the climate change of public consent. Purposes must be respected in research, particularly when sharing sensitive, identifiable data, and no assumptions should be made that differ from the original purposes to which users gave consent.

Data ethics and laws are desperately behind data science technology. Governments, institutions, civil society, and society as a whole need to reach a common vision, and leadership on how to manage these challenges. Who defines these boundaries that matter?

How do we move forward towards better use of data?

Our data and technology are taking on a life of their own, in space which is another frontier, and in time, as data gathered in the past might be used for quite different purposes today.

The public are being left behind in the game-changing decisions made by those who deem they know best about the world we want to live in. We need a say in what shape society wants that to take, particularly for our children as it is their future we are deciding now.

How about an ethical framework for datasharing that supports a transparent public interest, which tries to build a little kinder, less discriminating, more just world, where hope is stronger than fear?

Working with people, with consent, with public support and transparent oversight shouldn’t be too much to ask. Perhaps it is naive, but I believe that with an independent ethical driver behind good decision-making, we could get closer to datasharing like that.

That would bring Better use of data in government.

Purposes and consent are not barriers to be overcome. Within these, shaped by a strong ethical framework, good data sharing practices can tackle some of the real challenges that hinder ‘good use of data’: training, understanding data protection law, communications, accountability and intra-organisational trust. More data sharing alone won’t fix these structural weaknesses in current UK datasharing which are our really tough barriers to good practice.

How our public data will be used in the public interest will not be a destination or have a well-defined happy ending. It is a long-term process which needs to be consensual, and there needs to be a clear path to setting out together and achieving collaborative solutions.

While we are all different, I believe that society shares for the most part, commonalities in what we accept as good, and fair, and what we believe is important. The family sitting next to me have just counted out their money and bought an ice cream to share, and the staff gave them two. The little girl is beaming. It seems that even when things are difficult, there is always hope things can be better. And there is always love.

Even if some might give it a bad name.

********

img credit: flickr/sofi01/ Beauty and The Beast under creative commons
