“A new, a vast, and a powerful language is developed for the future use of analysis, in which to wield its truths so that these may become of more speedy and accurate practical application for the purposes of mankind than the means hitherto in our possession have rendered possible.” [on Ada Lovelace, The First Tech Visionary, New Yorker, 2013]

What would Ada Lovelace have argued for in today’s AI debates? I think she may have used her voice not only to call for the good use of data analysis, but also to champion her second strength: the power of her imagination.

James Ball recently wrote in The European [1]:

“It is becoming increasingly clear that the modern political war isn’t one against poverty, or against crime, or drugs, or even the tech giants – our modern political era is dominated by a war against reality.”

My overriding takeaway from three days spent at the Conservative Party Conference this week was similar. It reaffirmed the title of a school debate I lost at age 15, ‘We only believe what we want to believe.’

James writes that it is “easy to deny something that’s a few years in the future”, and that Conservatives, “especially pro-Brexit Conservatives – are sticking to that tried-and-tested formula: denying the facts, telling a story of the world as you’d like it to be, and waiting for the votes and applause to roll in.”

These positions are not confined to one party’s politics, or speeches of future hopes, but define perception of current reality.

I spent a lot of time listening to MPs. To Ministers, to Councillors, and to party members. At fringe events, in coffee queues, on the exhibition floor. I had conversations pressed against corridor walls as small press-illuminated swarms of people passed by with Queen Johnson or Rees-Mogg at their centre.

In one panel I heard a primary school teacher deny that child poverty really exists, or affects learning in the classroom.

In another, a digital Minister suggested in passing that Pupil Referral Units (PRUs) are where most of society’s ills start. But as a Birmingham head wrote this week, “They’ll blame the housing crisis on PRUs soon!” and “for the record, there aren’t gang recruiters outside our gates.”

This is no tirade on the failings of public policymakers, however. While it is easy to suspect malicious intent when you are at, or feel, the sharp end of policies which do harm, success is subjective.

It is clear that an overwhelming sense of self-belief exists in those responsible, in the intent of any given policy to do good.

Where policies include technology, this is underpinned by a self-reaffirming belief in its power. Power waiting to be harnessed by government and the public sector. Even more appealing where it is sold as a cost-saving tool in cash-strapped councils. Many that have cut away human staff are now trying to use machine power to make decisions. Some of the unintended consequences of taking humans out of the process are catastrophic for human rights.

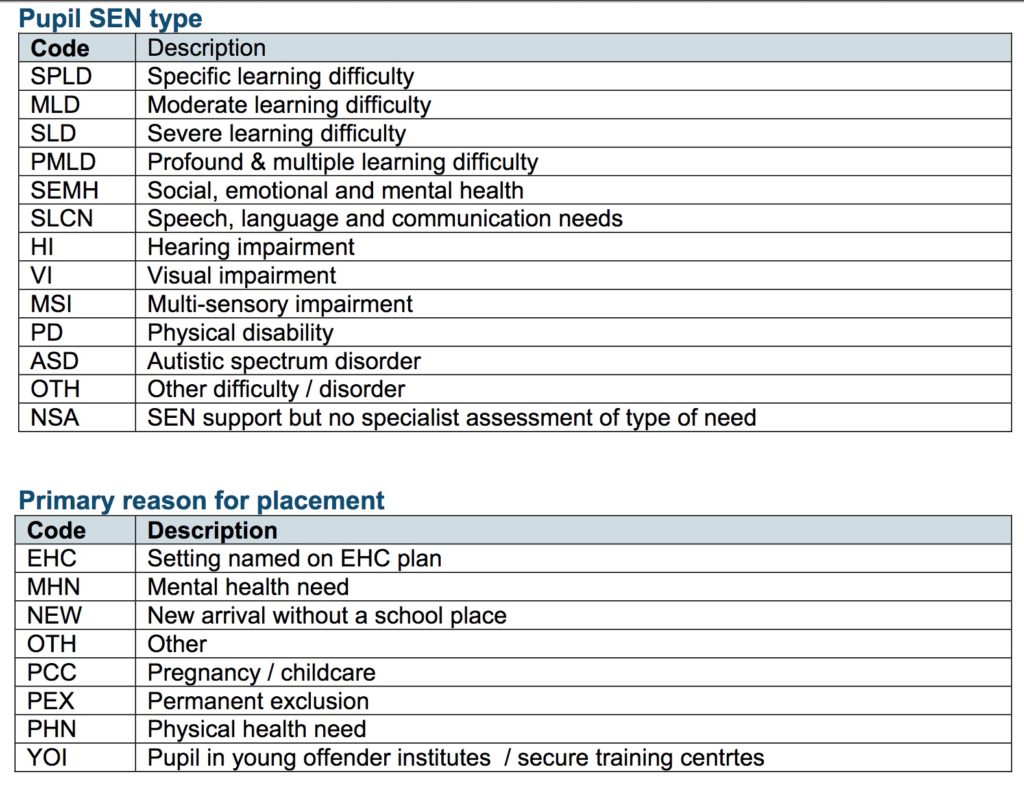

Sweeping human assumptions behind such thinking on social issues and their causes are becoming hard-coded into algorithmic solutions that involve identifying young people who are in danger of becoming involved in crime using “risk factors” such as truancy, school exclusion, domestic violence and gang membership.

The disconnect between perception of risk, the reality of risk, and real harm, whether perceived or felt from these applied policies in real life, is not so much a case of it being ‘easy to deny something that’s a few years in the future’, as Ball writes, but a denial of the reality now.

Concerningly, there is a lack of imagination about what real harms look like. There is no discussion of the cases where these predictive policies have no positive effect, or even a negative effect, and make things worse.

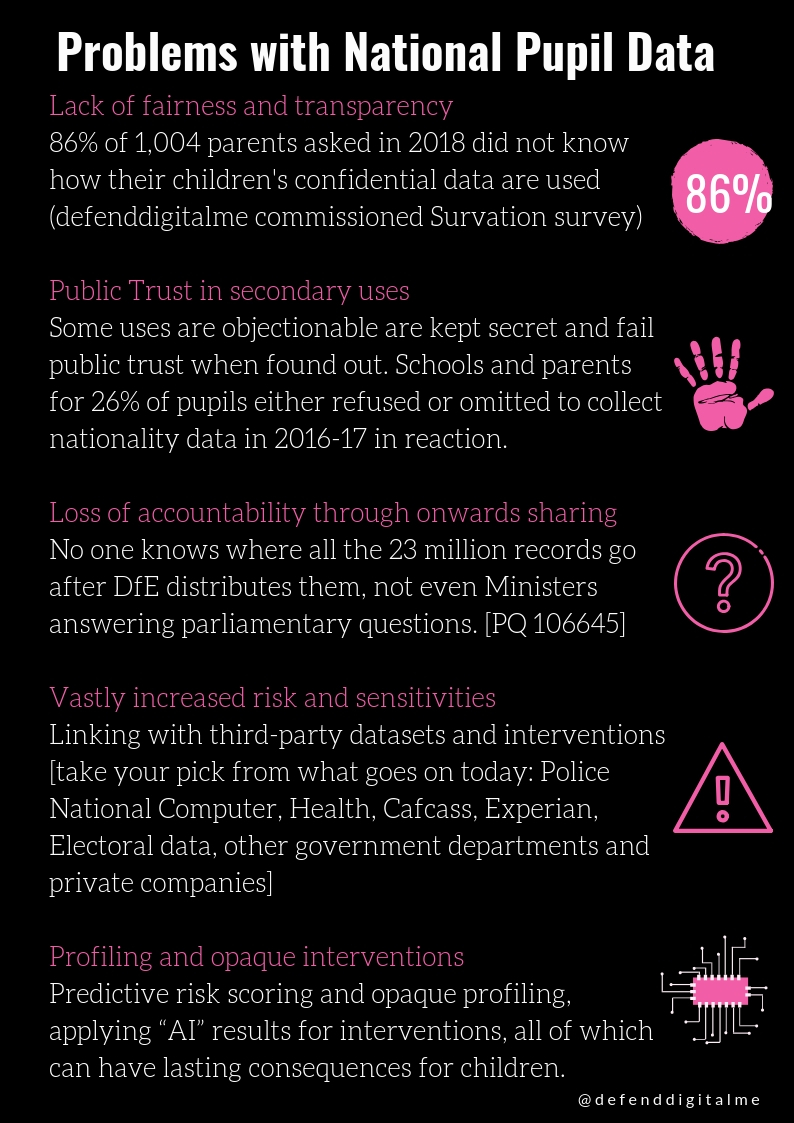

I’m deeply concerned that there is an unwillingness to recognise any failures in current data processing in the public sector, particularly at scale and where it concerns the well-known poor quality of administrative data, or to be accountable for those failures.

Harms, existing harms to individuals, are perceived as outliers. Any broad sweep of harms across a policy like Universal Credit seems to be perceived as political criticism, which makes the measurable failures less meaningful, less real, and less necessary to change.

There is a worrying, growing trend of finger-pointing exclusively at others’ tech failures instead. In particular, those of social media companies.

Imagination and mistaken ideas are reinforced where the idea is plausible, and shared. An oft-heard and self-affirming belief was repeated in many fora among policymakers, media and NGOs regarding children’s online safety: “There is no regulation online.” In fact, much that applies offline applies online. The Crown Prosecution Service Social Media Guidelines is a good place to start. [2] But no one discusses where children’s lives may be put at risk or made less safe through the use of state information about them.

Policymakers want data to give us certainty. But many uses of big data and new tools appear to do little more than quantify moral fears, and yet still guide real-life interventions in real lives.

Child abuse prediction and school exclusion interventions should not be test-beds for technology the public cannot scrutinise or understand.

In one trial attempting to predict exclusion, a recent UK research project in 2013-16 linked the school records of 800 children in 40 London schools with Metropolitan Police arrest records of all the participants. It found interventions created no benefit, and may have caused harm. [3]

“Anecdotal evidence from the EiE-L core workers indicated that in some instances schools informed students that they were enrolled on the intervention because they were the ‘worst kids’.”

“Keeping students in education, by providing them with an inclusive school environment, which would facilitate school bonds in the context of supportive student–teacher relationships, should be seen as a key goal for educators and policy makers in this area,” researchers suggested.

But policy makers seem intent on using systems that tick boxes, and create triggers to single people out, with quantifiable impact.

Some of these systems are known to be poor, or harmful.

When it comes to predicting and preventing child abuse, there is concern about the harms seen in US programmes that are ahead of us, such as those in Pittsburgh and in Chicago, which has scrapped its programme.

The Illinois Department of Children and Family Services ended a high-profile program that used computer data mining to identify children at risk for serious injury or death after the agency’s top official called the technology unreliable, and children still died.

“We are not doing the predictive analytics because it didn’t seem to be predicting much,” DCFS Director Beverly “B.J.” Walker told the Tribune.

Many professionals in the UK share these concerns. How long will they be ignored and children be guinea pigs without transparent error rates, or recognition of the potential harmful effects?

Helen Margetts, Director of the Oxford Internet Institute and Programme Director for Public Policy at the Alan Turing Institute, suggested at the IGF event this week that stopping the use of these AI tools in the public sector is impossible. We could not decide that “we’re not doing this until we’ve decided how it’s going to be.” “It can’t work like that.” [45:30]

Why on earth not? At least for these high-risk projects.

How long should children be the test subjects of machine learning tools at scale, without transparent error rates, audit, or scrutiny of their systems and understanding of unintended consequences?

Is harm to any child a price you’re willing to pay to keep using these systems to perhaps identify others, while we don’t know?

Is there an acceptable positive versus negative outcome rate?

The evidence so far on AI in child abuse prediction does not clearly show that more children are helped than harmed.

Surely it’s time to stop thinking, and demand action on this.

It doesn’t take much imagination to see the harms. Safe technology, and safe use of data, do not prevent imagination or innovation from being employed for good.

If we continue to ignore views from Patrick Brown, Ruth Gilbert, Rachel Pearson and Gene Feder, Charmaine Fletcher, Mike Stein, Tina Shaw and John Simmonds, I want to know why.

Where you are willing to sacrifice certainty of human safety for the machine decision, I want someone to be accountable for why.

References

[1] James Ball, The European, Those waging war against reality are doomed to failure, October 4, 2018.

[2] Thanks to Graham Smith for the link. “Social Media – Guidelines on prosecuting cases involving communications sent via social media”, The Crown Prosecution Service (CPS), August 2018.

[3] Obsuth, I., Sutherland, A., Cope, A. et al., London Education and Inclusion Project (LEIP): Results from a Cluster-Randomized Controlled Trial of an Intervention to Reduce School Exclusion and Antisocial Behavior (March 2016), J Youth Adolescence (2017) 46: 538. https://doi.org/10.1007/s10964-016-0468-4