Last week the DCMS announced that a consultation on changes to Data Protection laws is coming soon.

- UK announces intention for new multi-billion pound global data partnerships with the US, Australia and Republic of Korea

- International privacy expert John Edwards named as preferred new Information Commissioner to oversee shake-up

- Consultation to be launched shortly to look at ways to increase trade and innovation through data regime.

The Telegraph reported that “Mr Dowden argues that combined, they will enable Britain to set the ‘gold standard’ in data regulation, ‘but do so in a way that is as light touch as possible’.”

It’s an interesting mixture of metaphors. What is a gold standard? What is light touch? These phrases rely on the reader to supply the meaning, but they convey no actual content. Whether or not there will be substantive changes, we need to wait for the full announcement this month.

Oliver Dowden’s recent briefing to the Telegraph (August 25) was not the first trailer for changes that are yet to be announced. He wrote in the FT in February this year that “the UK has an opportunity to be at the forefront of global, data-driven growth,” and it looks like he has tried to co-opt the framing of rights as his own, describing “the beginning of a new era in the UK — one where we start asking ourselves not just whether we have the right to use data, but whether, given its potential for good, we have the right not to.”

There was nothing more on that in this week’s announcement; instead, the focus was on international trade. The Government says it is prioritising six international agreements with “the US, Australia, Colombia, Singapore, South Korea and Dubai…but in the future it also intends to target the world’s **fastest growing economies, among them**, India, Brazil, Kenya and Indonesia.” (my bold)

Notably absent from the ‘fastest growing’ list is China. What the countries on the list have in common is that they are not especially renowned for protecting human rights.

Human rights like privacy. The GDPR, and in turn the UK-GDPR, recognised that rights matter. In other regimes, data protection is designed not to prioritise the protection of rights but to harmonise data flows for trade, and that may be where we are headed. If so, it would be out of step with how the digital environment has changed since those older laws were considered satisfactory. Which they weren’t. That is why EU countries moved towards both better harmonisation *and* rights protection.

At the same time, while data protection laws increasingly align towards a high, interoperable, global standard, data sovereignty and protectionism are growing too, not least because transfers to the US remain unprotected from government surveillance.

Some countries and regions are establishing stricter rules on the cross-border transfer of personal information in the name of digital sovereignty, security or business growth: for example, Hessen’s decision on Microsoft and the “bring the data home” moves to German-based data centres.

In its big focus on a post-Brexit data-for-trade fire sale, the DCMS appears to be ignoring these risks of data distribution, despite having had a good domestic case study on its doorstep in 2020. The Department for Education has been giving away sensitive pupil data since 2012. Millions of people, including my own children, have no idea where it has gone. This lack of respect for current law makes me wonder how I can trust that our own government, and those we trade with, will respect our rights and the risks to us in future trade deals.

Dowden complains in the Telegraph that with the ICO, “you don’t know if you have done something wrong until after you’ve done it”. Isn’t that the way enforcement usually works? Should the 2019-20 ICO audit have turned a blind eye to the Department for Education’s failure to prioritise the rights of the over 21 million pupils in its named records? Don’t forget that even gambling companies had access to learners’ records, access the Department for Education claimed to be unaware of. To be ignorant of law that applies to you is a choice.

Dowden claims the changes will enable Britain to set the “gold standard” in data regulation. It’s an ironic analogy to use, since the gold standard, while once a measure of global trust between countries, isn’t used by any country today. Our government sold off much of our physical gold over 20 years ago, after the UK had been the centre of the global gold market for over 300 years. The gold standard is a thing of the past that sounds good but means nothing today. A true international gold standard existed for fewer than 50 years (1871 to 1914). Why did we even need it? Because we needed a consistent, trusted measure of monetary value, backed by trust in a commodity. “We have gold because we cannot trust governments,” President Herbert Hoover famously said in 1933 in his statement to Franklin D. Roosevelt. The gold standard was all about trust.

At defenddigitalme we’ve very recently been talking with young people about politicians’ use of language in debating national data policy, specifically data metaphors. They object to being used as the new “oil” to “power 21st century Britain”, as Dowden described it.

A sustainable national data strategy must respect human rights to be in step with what young people want. It must not go back to old-fashioned data laws shaped only by trade and not also by human rights: laws that are not fit for purpose even in the current digital environment. Any national strategy must be forward-thinking; otherwise it wastes time in what should be an urgent debate.

In fact, such a strategy is the wrong end of the telescope through which to look at personal data at all. Government should be focussing on delivering quality public services that support people’s interactions with the State, and on managing the administrative data those digital services produce as a by-product and externality. Accuracy. Interoperability. Registers. Audit. Rights management infrastructure. Admin data quality is quietly ignored while we package it up, hoping no one will notice it’s really. not. good.

Perhaps Dowden is doing nothing innovative at all. If these deals are about giving away admin data in international trade, he is simply continuing a long tradition of selling off the family silver. The government may have got to the point where there is little left to sell. The question now is: whose family does it come from?

To use another bad metaphor, Dowden is playing with fire here if the government doesn’t address the future of trust. Oil and fire don’t mix well. Increased data transfers, without meaningful safeguards including minimised data collection to start with, will increase risk and transfer that risk to you and me.

Risks of a lifetime of identity fraud are not just minor personal externalities in short-term trade. They affect nation-state security and digital statecraft. Knowledge of your public services is business intelligence. Loss of trust in data collection causes lasting collective harm to data quality, and the additional risk and harm that result are passed on to public health programmes and public interest research.

I’ll wait to see the details of the plans when they are announced. We might find they do little more than package up recommendations on Codes of Practice, Binding Corporate Rules and other guidance that the EDPB has issued in the last 12 months. But whatever they look like, we have yet to see any intention to put in place the infrastructure of rights management that admin data requires. We need data registers, yet those we had have been axed, and few new ones under the Digital Economy Act have replaced them. If the government wants our personal data to be part of new deals, people need transparency and controls so that they can exercise their rights.

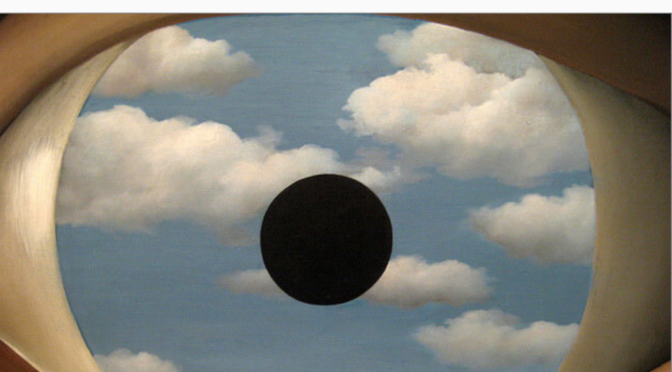

img: René Magritte The False Mirror Paris 1929

=========

Join me at the upcoming lunchtime online event on September 17th from 13:00 to talk about the effect of policymakers’ language in the context of the National Data Strategy: ODI Fridays: Data is not an avocado – why it matters to Gen Z https://theodi.org/event/odi-fridays-data-is-not-an-avocado-why-it-matters-to-gen-z/