Notes [and my thoughts] from the Women Leading in AI launch event of the Ten Principles of Responsible AI report and recommendations, February 6, 2019.

Speakers included Ivana Bartoletti (GemServ), Jo Stevens MP, Professor Joanna J Bryson, Lord Tim Clement-Jones, Roger Taylor (Chair, Centre for Data Ethics and Innovation), Sue Daley (techUK), and Reema Patel (Nuffield Foundation and Ada Lovelace Institute).

Challenging the unaccountable and the ‘inevitable’ is the title of the conclusion of the Women Leading in AI report Ten Principles of Responsible AI, launched this week, and this makes me hopeful.

“There is nothing inevitable about how we choose to use this disruptive technology. […] And there is no excuse for failing to set clear rules so that it remains accountable, fosters our civic values and allows humanity to be stronger and better.”

Ivana Bartoletti, co-founder of Women Leading in AI, began the event, hosted at the House of Commons by Jo Stevens, MP for Cardiff Central, and spoke brilliantly of why it matters right now.

Everyone’s talking about ethics, she said, but it has limitations. I agree with that. This was by contrast very much a call to action.

It was nearly impossible not to cheer as she set out, without any of the usual bullshit, the reasons why we need to stop “churning out algorithms which discriminate against women and minorities.”

Professor Joanna Bryson took up multiple issues, including:

- Innovation: ‘flashes in the pan’ are not sustainable, and not what we’re looking for in things that work for us [society].

- The power dynamics of data, noting Facebook, Google et al are global assets, and are also global problems, and flagged the UK consultation on taxation open now.

- And that it is critical that we do not have another nation with access to all of our data.

She challenged the audience to think about the fact that inequality is higher now than it has been since World War I. That the rich are getting richer and that imbalance of not only wealth, but of the control individuals have in their own lives, is failing us all.

This big picture thinking while zooming in on detailed social, cultural, political and tech issues, fascinated me most that evening. It frustrated the man next to me apparently, who said to me at the end, ‘but they haven’t addressed anything on the technology.’

[I wondered if that summed up neatly, some of why fixing AI cannot be a male dominated debate. Because many of these issues for AI, are not of the technology, but of people and power.]

Jo Stevens, MP for Cardiff Central, hosted the event and was candid about politicians’ level of knowledge and the need to catch up on some of what matters in the tech sector.

We grapple with the speed of tech, she said. We’re slow at doing things and tech moves quickly. It means that we have to learn quickly.

While discussing how regulation is not something AI tech companies should fear, she suggested that a constructive framework whilst protecting society against some of the problems we see is necessary and just, because self-regulation has failed.

She talked about their inquiry, which began with “fake news” and disinformation, but has grown to include:

- wider behavioural economics,

- how it affects democracy.

- understanding the power of data.

- disappointment with social media companies, who understand the power they have, and fail to be accountable.

She wants to see something that changes the way big business works, in the way that employment regulation challenged exploitation of the workforce and unsafe practices in the past.

The bias (conscious or unconscious) and power imbalance have some similarity with the effects on marginalised communities (women, BAME, people with disabilities), and she was looking forward to seeing the proposed solutions, and welcomed the principles.

Lord Clement-Jones, as Chair of the Select Committee on Artificial Intelligence, picked up the values they had highlighted in the March 2018 report, AI in the UK: ready, willing and able?

Right now there are so many different bodies, groups in parliament and others looking at this [AI / Internet / The Digital World] he said, so it was good that the topic is timely, front and centre with a focus on women, diversity and bias.

He highlighted the importance of maintaining public trust. How do you understand bias? How do you know how algorithms are trained and understand the issues? He fessed up to being a big fan of DotEveryone and their drive for better ‘digital understanding’.

[Though sometimes this point is over complicated by suggesting individuals must understand how the AI works, the consensus of the evening was common sense, and aligned with the Article 29 Working Party guidance: that data controllers must ensure they explain clearly and simply to individuals how the profiling or automated decision-making process works, and what its effect is for them.]

The way forward he said includes:

- Designing ethics into algorithms up front.

- Data audits need to be diverse in order to embody fairness and diversity in the AI.

- Questions of the job market and re-skilling.

- The enforcement of ethical frameworks.

He also asked how far bodies will act, in different debates. Deciding who decides on that is still a debate to be had.

For example, aware of the social credit agenda and scoring in China, we should avoid the same issues. He also agreed with Joanna, that international cooperation is vital, and said it is important that we are not disadvantaged in this global technology. He expected that we [the Government Office for AI] will soon promote a common set of AI ethics, at the G20.

Facial recognition and AI are examples of areas that require regulation for safe use of the tech and to weed out those using it for the wrong purposes, he suggested.

However, on regulation he held back. We need to be careful about too many regulators he said. We’ve got the ICO, FCA, CMA, OFCOM, you name it, we’ve already got it, and they risk tripping over one another. [What I thought as CDEI was created para 31.]

We [the Lords Committee] didn’t suggest yet another regulator for AI, he said and instead the CDEI should grapple with those issues and encourage ethical design in micro-targeting for example.

Roger Taylor (Chair of the CDEI), after saying the WLinAI report felt as if someone had left their homework on his desk, supported the WLinAI principles as important, and agreed it was time for practical things, and what needs done.

Can our existing regulators do their job, and cover AI? he asked, suggesting new regulators will not be necessary. Bias, he rightly recognised, already exists in our laws and bodies with public obligations, and in how AI is already operating.

What evidence is needed, what process is required, what is needed to assure that we know how it is actually operating? Who gets to decide to know if this is fair or not? While these are complex decisions, they are ultimately not for technicians, but a decision for society, he said.

[So far so good.]

Then he made some statements which were rather more ambiguous. The standards expected of the police will not be the same as those for marketeers micro targeting adverts at you, for example.

[I wondered how and why.]

Start-up industries pay more to Google and Facebook than in taxes, he said.

[I wondered how and why.]

When we think about a knowledge economy, the output of our most valuable companies is increasingly ‘what is our collective truth? Do you have this diagnosis or not? Are you a good credit risk or not? Even who you think you are — your identity will be controlled by machines.’

What can we do as one country [to influence these questions on AI], in what is a global industry? He believes, a huge amount. We are active in the financial sector, the health service, education, and social care — and while we are at the mercy of large corporations, even large corporations obey the law, he said.

[Hmm, I thought, considering the Google DeepMind-Royal Free agreement that didn’t, and venture capitalists not renowned for their ethics who nonetheless advise on some of the current data / tech / AI boards. I am sceptical of corporate capture in UK policy making.]

The power to use systems to nudge our decisions, he suggested, is one that needs careful thought. The desire to use the tech to help make decisions is inbuilt into what is actually wrong with the technology that enables us to do so. [With this I strongly agree, and there is too little protection from nudge in data protection law.]

The real question here is, “What is OK to be owned in that kind of economy?” he asked.

This was arguably the neatest and most important question of the evening, and I vigorously agreed with him asking it, but then I worry about his conclusion in passing, that he was, “very keen to hear from anyone attempting to use AI effectively, and encountering difficulties because of regulatory structures.”

[And unpopular or contradictory a view as it may be, I find it deeply ethically problematic for the Chair of the CDEI to be held by someone who had a joint-venture that commercially exploited confidential data from the NHS without public knowledge, and its sale to the Department of Health was described by the Public Accounts Committee as a “hole and corner deal”. That was the route towards care.data, that his co-founder later led for NHS England. The company was then bought by Telstra, where Mr Kelsey went next on leaving NHS England. The whole commodification of confidentiality of public data, without regard for public trust, is still a barrier to sustainable UK data policy.]

Sue Daley (techUK) agreed this year needs to be the year we see action, and the report is a call to action on issues that warrant further discussion.

- Business wants to do the right thing, and we need to promote it.

- We need two things — confidence and vigilance.

- We’re not starting from scratch: she talked about GDPR as the floor, not the ceiling. A starting point.

[I’m not quite sure what she was after here, but perhaps it was the suggestion that data regulation is fundamental in AI regulation, with which I would agree.]

What is the gap that needs filled, she asked? Gap analysis is what we need next, to avoid complexity and duplication of work with other bodies. If we can answer some of the big, profound questions that need to be addressed, we can position the UK as the place where companies want to come.

Sue was the only speaker that went on to talk about the education system that needs to frame what skills are needed for a future world for a generation, ‘to thrive in the world we are building for them.’

[The Silicon Valley driven entrepreneur narrative that the education system is broken, is not an uncontroversial position.]

She finished with the hope that young people watching BBC Icons the night before would see Alan Turing [winner of the title] and say yes, I want to be part of that.

Listening to Reema Patel, representative of the Ada Lovelace Institute, was the reason I didn’t leave early and missed my evening class. Everything she said resonated, and was some of the best I have heard in the recent UK debate on AI.

- Civic engagement, the role of the public is as yet unclear with not one homogeneous, but many publics.

- The sense of disempowerment is important, with disconnect between policy and decisions made about people’s lives.

- Transparency and literacy are key.

- Accountability is vague but vital.

- What does the social contract look like on people using data?

- Data may not only be about an individual and under their own responsibility, but about others and what does that mean for data rights, data stewardship and articulation of how they connect with one another, which is lacking in the debate.

- Legitimacy; If people don’t believe it is working for them, it won’t work at all.

- Ensuring tech design is responsive to societal values.

2018 was a terrible year she thought. Let’s make 2019 better. [Yes!]

Comments from the floor and questions included Professor Noel Sharkey, who spoke about the reasons why it is urgent to act, especially where technology is unfair and unsafe and already in use. He pointed to Compass (Durham police), and to predictive policing using AI and facial recognition with 5% accuracy, and said that the Met was not taking these flaws seriously. Liberty published a strong report on it this week.

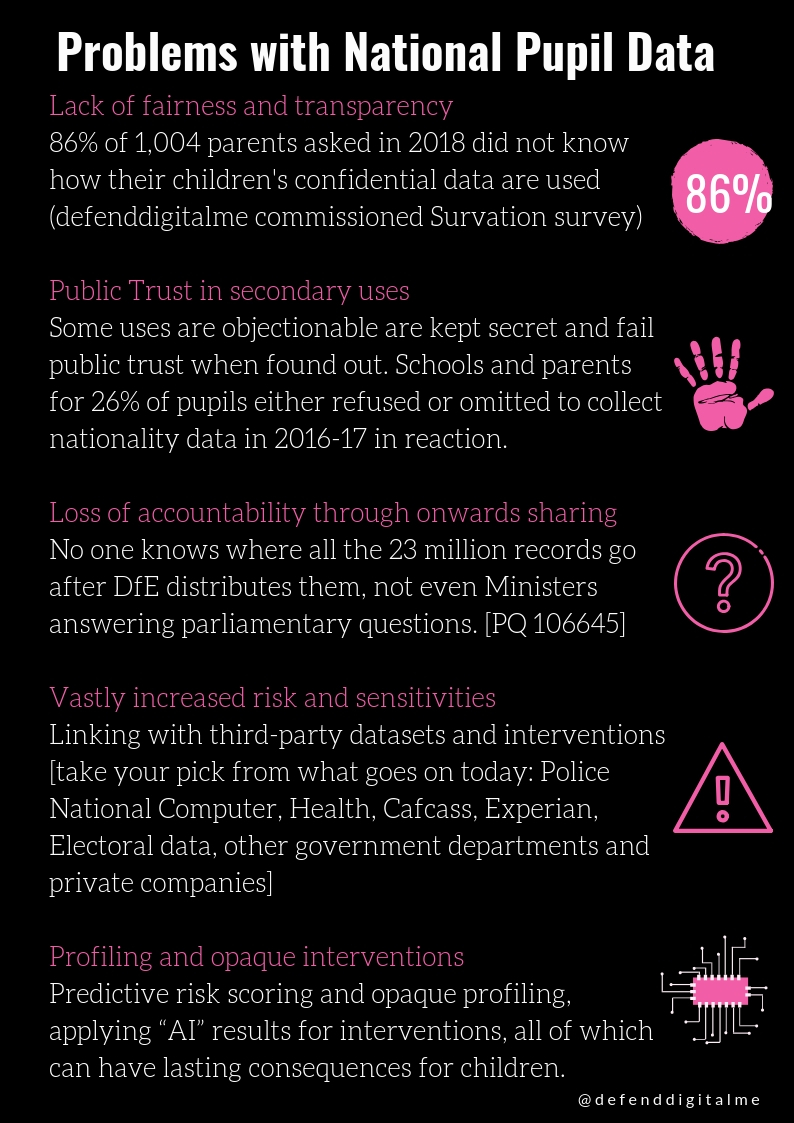

Caroline, from Women in AI, echoed my own comments on the need to get urgent review in place of these technologies used with children in education and social care [in particular where used for prediction of child abuse and interventions in family life].

added to the conversation on accountability, to say people are not following existing software and audit protocols, someone just needs to go and see if people did the right thing.

The basic question of accountability, is to ask if any flaw is the fault of a corporation, of due diligence, or of the users of the tool? Telling people that this is the same problem as any other software, makes it much easier to find solutions to accountability.

Tim Clement-Jones asked, on how many fronts can we fight at the same time? If government has appeared to exempt itself from some of these issues, and created a weak framework for itself on handling data in the Data Protection Act, then critically, he also asked, is the ICO adequately enforcing on government and public accountability, at local and national levels?

Sue Daley also reminded us that politicians need not know everything, but need to know what the right questions are to be asking. What are the effects that this has on my constituents, in employment, my family? And while she also suggested that not using the technology could be unethical, a participant countered that it’s not the worst thing to have to slow technology down and ensure it is safe before we all go along with it.

My takeaways of the evening, included that there is a very large body of women, of whom attendees were only a small part, who are thinking, building and engineering solutions to some of these societal issues embedded in policy, practice and technology. They need heard.

It was genuinely electric and empowering, to be in a room dominated by women, women reflecting diversity of a variety of publics, ages, and backgrounds, and who listened to one another. It was certainly something out of the ordinary.

There was a subtle but tangible tension on whether or not regulation beyond what we have today is needed.

While regulating the human behaviour that becomes encoded in AI, we need to ensure that the ethics of human behaviour, reasonable expectations and fairness are not conflated with the technology [ie a question of, is AI good or bad], but applied to how it is designed, trained, employed and audited, and to assessing whether it should be used at all.

This was the most effective group challenge I have heard to date, countering the usual assumed inevitability of a mythical omnipotence. Perhaps, Julia Powles, this is the beginnings of a robust, bold, imaginative response.

Why there aren’t more women or people from minorities working in the sector was a really interesting, if short, part of the discussion. Why should young women and minorities want to go into an environment that they can see is hostile, in which they may not be heard, and we still hold *them* responsible for making work work?

And while there were many voices lamenting the skills and education gaps, there were probably fewer who might see the solution more simply, as I do. Schools are foreshortening Key Stage 3 by a year, replacing a breadth of subjects, with an earlier compulsory 3 year GCSE curriculum which includes RE, and PSHE, but means that at 12, many children are having to choose to do GCSE courses in computer science / coding, or a consumer-style iMedia, or no IT at all, for the rest of their school life. This either-or content, is incredibly short-sighted and surely some blend of non-examined digital skills should be offered through to 16 to all, at least in parallel importance with RE or PSHE.

I also still wonder, about all that incredibly bright and engaged people are not talking about and solving, and missing in policy making, while caught up in AI. We need to keep thinking broadly, and keep human rights at the centre of our thinking on machines. Anaïs Nin wrote over 70 years ago about the risks of growth in technology to expand our potential for connectivity through machines, but diminish our genuine connectedness as people.

“I don’t think the [American] obsession with politics and economics has improved anything. I am tired of this constant drafting of everyone, to think only of present day events”.

And as I wrote about nearly 3 years ago, we still seem to have no vision for sustainable public policy on data, or establishing a social contract for its use as Reema said, which underpins the UK AI debate. Meanwhile, the current changing national public policies in England on identity and technology, are becoming catastrophic.

Challenging the unaccountable and the ‘inevitable’ in today’s technology and AI debate, is an urgent call to action.

I look forward to hearing how Women Leading in AI plan to make it happen.

References:

Women Leading in AI website: http://womenleadinginai.org/

WLinAI Report: 10 Principles of Responsible AI

@WLinAI #WLinAI

image credits

post: creative commons Mark Dodds/Flickr

event photo: / GemServ