What it means to be human is going to be different. That was the last word from a panel of four excellent speakers, chaired with sparkling wit and charm by Timandra Harkness, at tonight’s Turing Institute event on the future of data, hosted at the British Library.

The first speaker, Bernie Hogan, of the Oxford Internet Institute, spoke of Facebook’s emotion experiment, and the challenges of commercial companies’ ownership and concentration of knowledge, as well as their decisions controlling what content you get to see.

He also explained simply what an API is in human terms. It is like a plug in a socket, but instead of electricity you get a flow of data, and the data controller can control which data come out of the socket.
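To make the socket analogy concrete, here is a minimal sketch in Python. Everything in it is a made-up illustration rather than any real platform’s API: the record, the field names and the `api_get` function are all my own assumptions. The point is only that the controller holds the full record, and chooses what flows out.

```python
# Hypothetical illustration of the "socket" analogy; not any real platform's API.

# Everything the data controller holds about one person (invented example data).
FULL_RECORD = {
    "name": "Ada",
    "posts": ["hello world"],
    "private_messages": ["secret"],
    "inferred_mood": "happy",
}

# The data controller decides which fields the "socket" exposes.
EXPOSED_FIELDS = {"name", "posts"}


def api_get(record, exposed_fields=EXPOSED_FIELDS):
    """Return only the fields the controller has chosen to expose."""
    return {key: value for key, value in record.items() if key in exposed_fields}


if __name__ == "__main__":
    # Whoever plugs in sees the name and posts, but never the other fields.
    print(api_get(FULL_RECORD))
```

The person plugging in has no way to tell from the output alone what else is held behind the socket, which is part of why available does not mean accessible.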

And he brilliantly posed a thought experiment: what would it mean to be able to go back in time to the Nuremberg trials, and regulate not only medical ethics, but also the data ethics of indirect and computational use of information? How would it affect today’s thinking on AI and machine learning and where we are now?

“Available does not mean accessible, transparent does not mean accountable”

Charles from the Bureau of Investigative Journalism, who had also worked for Trinity Mirror using data analytics, introduced some of the issues that large datasets pose for the public.

- People rarely have the means to do any analytics well.

- Even if open data are available, they are not necessarily accessible, due to the volume of data, the constraints of common software (such as Excel), and time constraints.

- Without the facts, people cannot go to see a [parliamentary] representative or community group to try and solve the problem.

- Local journalists often have targets for the number of stories they need to write, and for the number of Internet views/hits to meet.

Putting data out there is only transparency, not accountability, if we cannot turn information into knowledge that can benefit the public.

“Trust is like personal privacy. Once lost, it is very hard to restore.”

Jonathan Bamford, Head of Parliamentary and Government Affairs at the ICO, took us back to why we need to control data at all. Democracy. Fairness. The balance between people’s rights, like privacy and Freedom of Information, and the power of data holders. The awareness that the power of authorities and companies will affect the lives of ordinary citizens. And he said that even early on there was a feeling that we needed to regulate who knows what about us.

The third generation of Data Protection law, he said, is now more important than ever, to manage a whole new era of technology and uses of data that did not exist when previous laws were made.

But, he said, the principles stand true today. Don’t be unfair. Use data for the purposes people expect. Security of data matters. As do rights to see the data people hold about us. Make sure data are relevant, accurate, necessary and kept for a sensible amount of time.

And even if we think that technology is changing, he argued, the principles will stand, and organisations need to consider these principles before they do things, considering privacy as a fundamental human right by default, and data protection by design.

After all, we should remember the Information Commissioner herself recently said,

“privacy does not have to be the price we pay for innovation. The two can sit side by side. They must sit side by side.

It’s not always an easy partnership and, like most relationships, a lot of energy and effort is needed to make it work. But that’s what the law requires and it’s what the public expects.”

“We must not forget, evil people want to do bad things. AI needs to be audited.”

Joanna J. Bryson was brilliant in her multifaceted talk, summing up how data will affect our lives. She explained how implicit biases work and how we reason and make decisions, and showed how some of the ways we think show up in Internet searches. She showed, in practical ways, how machine learning is shaping our future in ways we cannot see. And she said that firms asserting that they do these things fairly and openly, and that regulation no longer fits new tech, “is just hoo-hah”.

She talked about the exciting possibilities and good uses of data, but said that “we must not forget, evil people want to do bad things. AI needs to be audited.” She summed up: we will use data to predict ourselves. And she said:

“What it means to be human is going to be different.”

That is perhaps the crux of this debate. How do data, machine learning and its mining of massive datasets, and their uses for ‘prediction’, affect us as individual human beings, and our humanity?

The last audience question addressed inequality. Solutions like transparency, subject access, accountability, and understanding biases and how we are used, will never be accessible to all. Making them accessible needs a far greater digital understanding across all levels of society. How can society both benefit from and be involved in the future of data in public life? The conclusion was that we need more faith in public institutions working for people at scale.

But what happens when those institutions let people down, at scale?

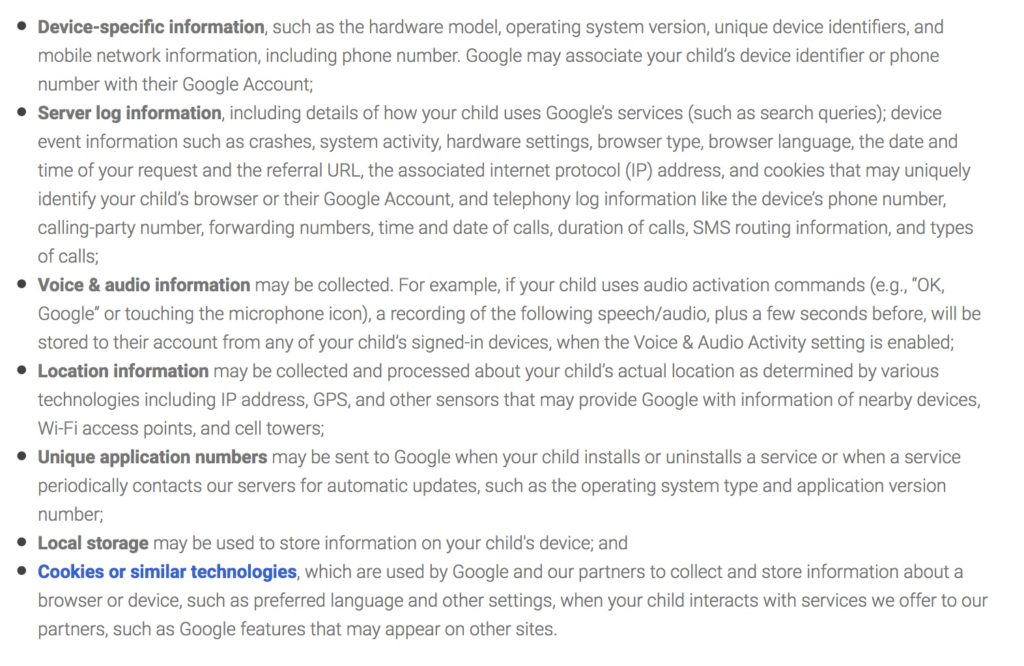

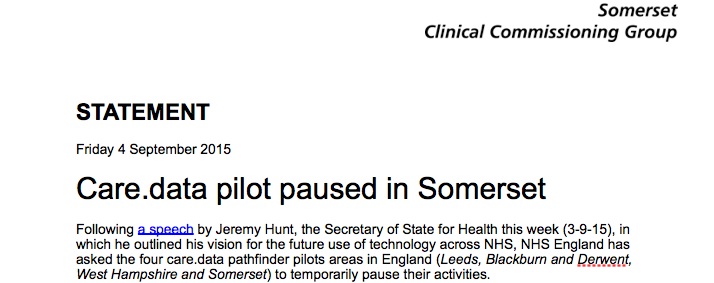

And some institutions do let us down. Such as over plans for how our NHS health data will be used. Or when our data are commercialised without consent, breaking data protection law. Why do 23 million people not know how their education data are used? The government itself does not use our data in ways we expect, at scale. The use of school children’s data in immigration enforcement fails to be fair, is not the purpose for which the data were collected, and causes harm and distress when the data are used in direct interventions including “to effect removal from the UK”, and “create a hostile environment.” There can be a lack of commitment to independent oversight in practice, compared to what is promised by the State. Or no oversight at all after data are released. And ethical standards among researchers using data are inconsistent.

The debate was less about the Future of Data in Public Life, and much more about how big data affects our personal lives. Most of the discussion was around how we understand the use of our personal information by companies and institutions, and how we will ensure democracy, fairness and equality in future.

One question from an audience member went unanswered: how do we protect ourselves from the harms we cannot see, or protect the most vulnerable who are least able to protect themselves?

“How can we future proof data protection legislation and make sure it keeps up with innovation?”

That audience question is timely given the new Data Protection Bill. But what legislation means in practice, I am learning rapidly, can be very different from what is written down in law.

One additional tool in data privacy and rights legislation is up for discussion, right now, in the UK. If it matters to you, take action.

NGOs could be enabled to make complaints on behalf of the public under Article 80 of the General Data Protection Regulation (GDPR). However, the government has excluded that right from the draft UK Data Protection Bill launched last week.

“Paragraph 53 omits from Article 80, representation of data subjects, ‘where provided for by Member State law’ from paragraph 1 and paragraph 2,” [Data Protection Bill Explanatory notes, paragraph 681 p84/112]. Article 80(2) gives member states the option to provide for NGOs to take action independently on behalf of the many people who may have been affected.

If you want that right, a right others will be getting in other countries in the EU, then take action. Call your MP or write to them. Ask for Article 80, the right to representation, in UK law. We need to ensure that our human rights continue to be enacted and enforceable to the maximum, if “what it means to be human is going to be different.”

For the Future of Data has never been more personal.

![Building Public Trust [5]: Future solutions for health data sharing in care.data](https://jenpersson.com/wp-content/uploads/2014/04/caredatatimeline-637x372.jpg)