The Lords Select Committee report on AI in the UK, in March 2018, suggested that “the Government plans to adopt the Hall-Pesenti Review recommendation that ‘data trusts’ be established to facilitate the ethical sharing of data between organisations.”

Since data distribution already happens, what difference would a Data Trust model make to ‘ethical sharing’?

A ‘set of relationships underpinned by a repeatable framework, compliant with parties’ obligations’ seems little better than what we have today, with all its problems including deeply unethical policy and practice.

The ODI set out some of the characteristics Data Trusts might have or share. As importantly, we should define what Data Trusts are not. They should not simply be a new name for pooling content and a new single distribution point. Click and collect.

But is a Data Trust little more than a new description for what goes on already? Either a physical space or legal agreements for data users to pass around the personal data from the unsuspecting, and sometimes unwilling, public. Friends-with-benefits who each bring something to the party to share with the others?

As with any communal risk, it is the standards of the weakest link, the least ethical, the one that pees in the pool, that will increase reputational risk for all who take part, and spoil it for everyone.

Importantly, the Lords AI Committee report recognised that there is an inherent risk in how the public would react to Data Trusts, because there is no social license for this new data sharing.

“Under the current proposals, individuals who have their personal data contained within these trusts would have no means by which they could make their views heard, or shape the decisions of these trusts.”

Views those keen on Data Trusts seem keen to ignore.

When the Administrative Data Research Network was set up in 2013, a new infrastructure for “deidentified” data linkage, extensive public dialogue was carried out across the UK. It concluded in a report with findings very similar to those apparent at dozens of care.data engagement events in 2014-15:

There is not public support for

- “Creating large databases containing many variables/data from a large number of public sector sources,

- Establishing greater permanency of datasets,

- Allowing administrative data to be linked with business data, or

- Linking of passively collected administrative data, in particular geo-location data”

The other ‘red-line’ for some participants was allowing “researchers for private companies to access data, either to deliver a public service or in order to make profit. Trust in private companies’ motivations were low.”

All of the above could be central to Data Trusts. All of the above highlight that in any new push to exploit personal data, the public must not be the last to know. And until all of the above are resolved, the social license underpinning the work will always be missing.

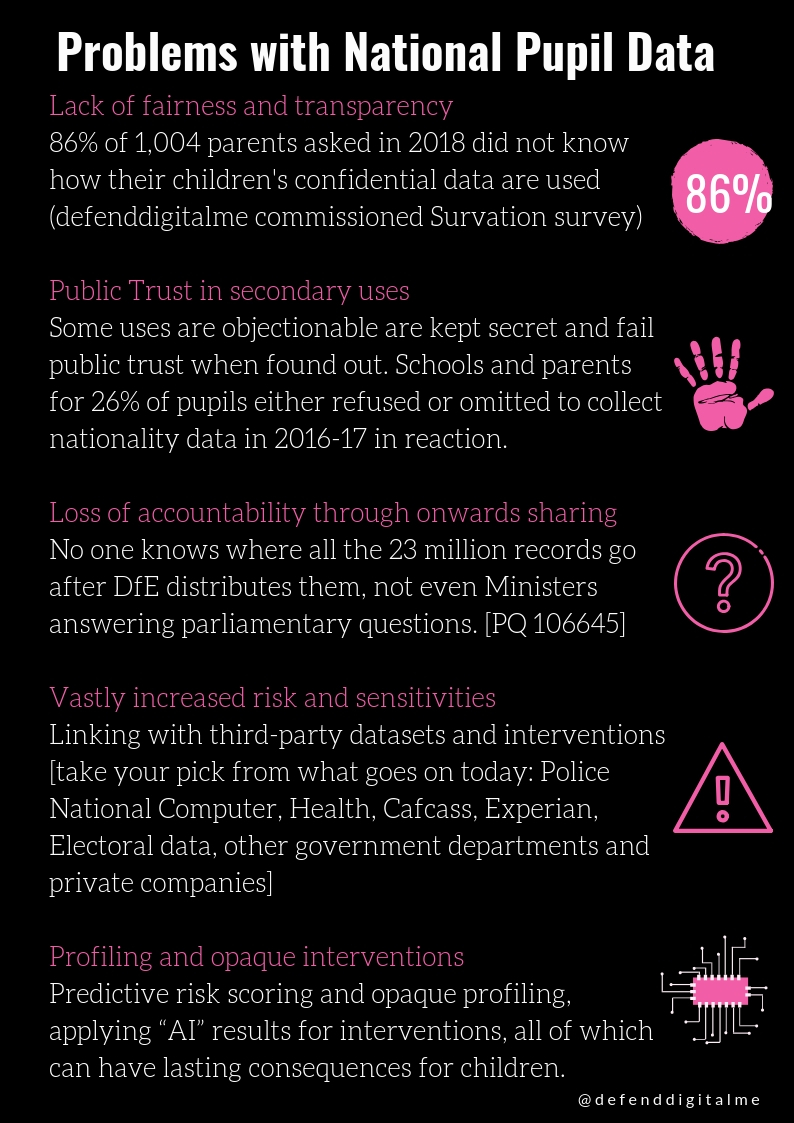

Take the National Pupil Database (NPD) as a case study in a Data Trust done wrong.

It is a mega-database of over 20 other datasets. Raw data has been farmed out for years under terms and conditions to third parties, including users who hold an entire copy of the database, such as the somewhat secretive and unaccountable Fischer Family Trust, and others, who don’t answer to Freedom-of-Information, and whose terms are hidden under commercial confidentiality. They buy and benchmark data from schools and sell it back to some. The profiling is hidden from parents and pupils, yet the FFT predictive risk scoring can shape a child’s school experience from age 2. They don’t really want to answer how staff tell if a child’s FFT profile and risk score predictions are accurate, or if they can spot errors or a wrong data input somewhere.

Even as the NPD moves towards risk reduction, its issues remain. When will children be told how data about them are used?

Is it any wonder that many people in the UK feel a resentment of institutions and orgs who feel entitled to exploit them, or nudge their behaviour, and a need to ‘take back control’?

It is naïve for those working in data policy and research to think that it does not apply to them.

We already have safe infrastructures in the UK for excellent data access. What users are missing is the social license to use them.

Some of today’s data uses are ethically problematic.

No one should be talking about increasing access to public data before delivering increased public understanding. Data users must get over their fear of what would happen if the public found out.

If your data use being on the front pages would make you nervous, maybe it’s a clue you should be doing something differently. If you don’t trust that the public would support it, then perhaps it doesn’t deserve to be trusted. Respect individuals’ dignity and human rights. Stop doing stupid things that undermine everything.

Build the social license that care.data was missing. Be honest. Respect our right to know, and right to object. Build them into a public UK data strategy to be understood and be proud of.

Part 1. Ethically problematic

Ethics is dissolving into little more than a buzzword. Can we find solutions underpinned by law, and ethics, and put the person first?

Part 2. Can Data Trusts be trustworthy?

As long as data users ignore data subjects’ rights, Data Trusts have no social license.