“By when will NHS England commit to respect the 700,000 objections [1] to secondary data sharing already logged* but not enacted?” [gathered from objections to secondary uses in the care.data rollout, Feb 2014*]

Until then, can organisations continue to use health data held by HSCIC for secondary purposes, ethically and legally, or are they placing themselves at reputational risk?

If HSCIC continues to share, what harm may it do to public confidence in data sharing in the NHS?

I should have asked this explicitly of the National Information Board (NIB) at its June 17th board meeting [2], which rode in for the last three hours of the two-day Digital Health and Care Congress at the King’s Fund.

But I chose to mention it only in passing, since I assumed it is already being worked on and a public communication will follow very soon. I had lots of other constructive things I wanted to hear in the time planned for ‘public discussion’.

Since then it’s been niggling at me that I should have asked more directly, as it dawned on me, watching the meeting recording and, more importantly, reading the NIB papers [3], that it’s not otherwise mentioned. And there was no group discussion anyway.

Mark Davies, Director at the UK Department of Health, talked in fairly jargon-free language about transparency. [01:00] I could have asked him when we will see more of it in practice.

Importantly, he said on building and sustaining public trust, “if we do not secure public trust in the way that we collect store and use their personal confidential data, then pretty much everything we do today will not be a success.”

So why does the talk of securing trust seem at odds with the reality?

Evidence of Public Voice on Opt Out

Is the lack of action based on uncertainty over what to do?

Mark Davies also said “we have only a sense” and we don’t have “a really solid evidence base” of what the public want. He said, “people feel slightly uncomfortable about data being used for commercial gain.” Which he felt was “awkward” as commercial companies included pharma working for public good.

If he has not done so already, though I am sure he will have, he could read NHS England’s own care.data listening feedback. People were strongly against commercial exploitation of data. Many were livid about its use. [see other care.data events] Not ‘slightly uncomfortable.’ And they were able to make a clear distinction between uses by commercial companies that they felt were in the public interest, such as bona fide pharma research, and uses such as consumer market research, even if by the same company. Risk stratification and commissioning do not need, and according to the Caldicott Review [8] should not have, fully identifiable individual level data sharing.

Uses are actually not so hard to differentiate. In fact, it’s exactly what people want. To have the choice to have their data used only for direct care or to choose to permit sharing between different users, permitting say, bona fide research. Or at minimum, possible to exclude commercially exploitative uses and reuse. To enable this would enable more data sharing with confidence.

I’d also suggest there is a significant evidence base gathered in the data trust deficit work from the Royal Statistical Society, a poll on privacy for the Joseph Rowntree Foundation, and work done for the ADRN/ESRC. I’m sure he and the NIB are aware of these projects, and Mark Davies said himself more is currently being done with the Nuffield Trust.

Work with almost 3,000 young people for the Royal Academy of Engineering confirmed what those interested in privacy know, but is the opposite of what is often said about young people and privacy – they care and want control.

NHS England has itself further said it has held ‘over 180’ listening events in 2014 and feedback was consistent with public letters to papers, radio phone-ins and news reports in spring 2014.

Don’t give raw data out, exclude access to commercial companies not working in the public interest, exclude non-bona fide research use and re-use licenses, define the future purposes, improve legal protection including the opt out and provide transparency to trust.

How much more evidence does anyone need to have of public understanding and feeling, or is it simply that NHS England and the DH don’t like the answers given? Listening does not equal heard.

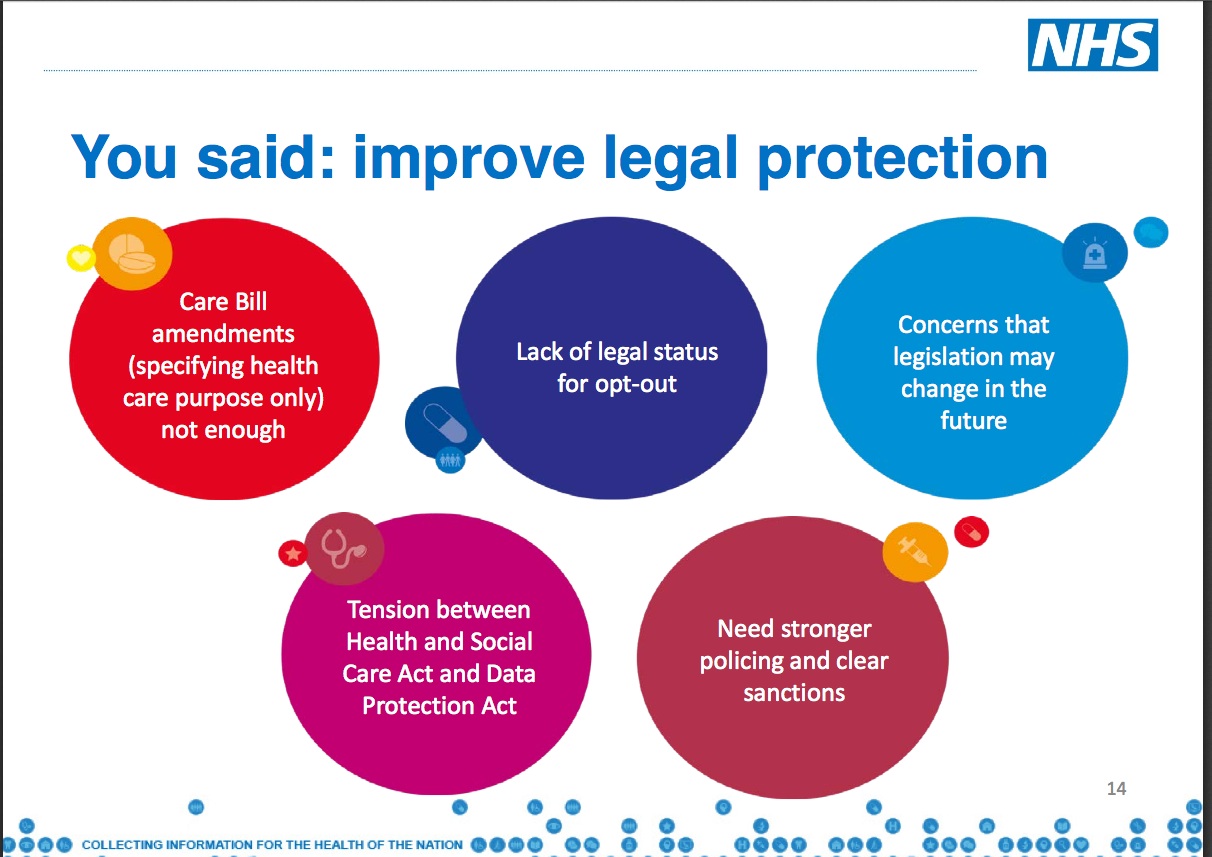

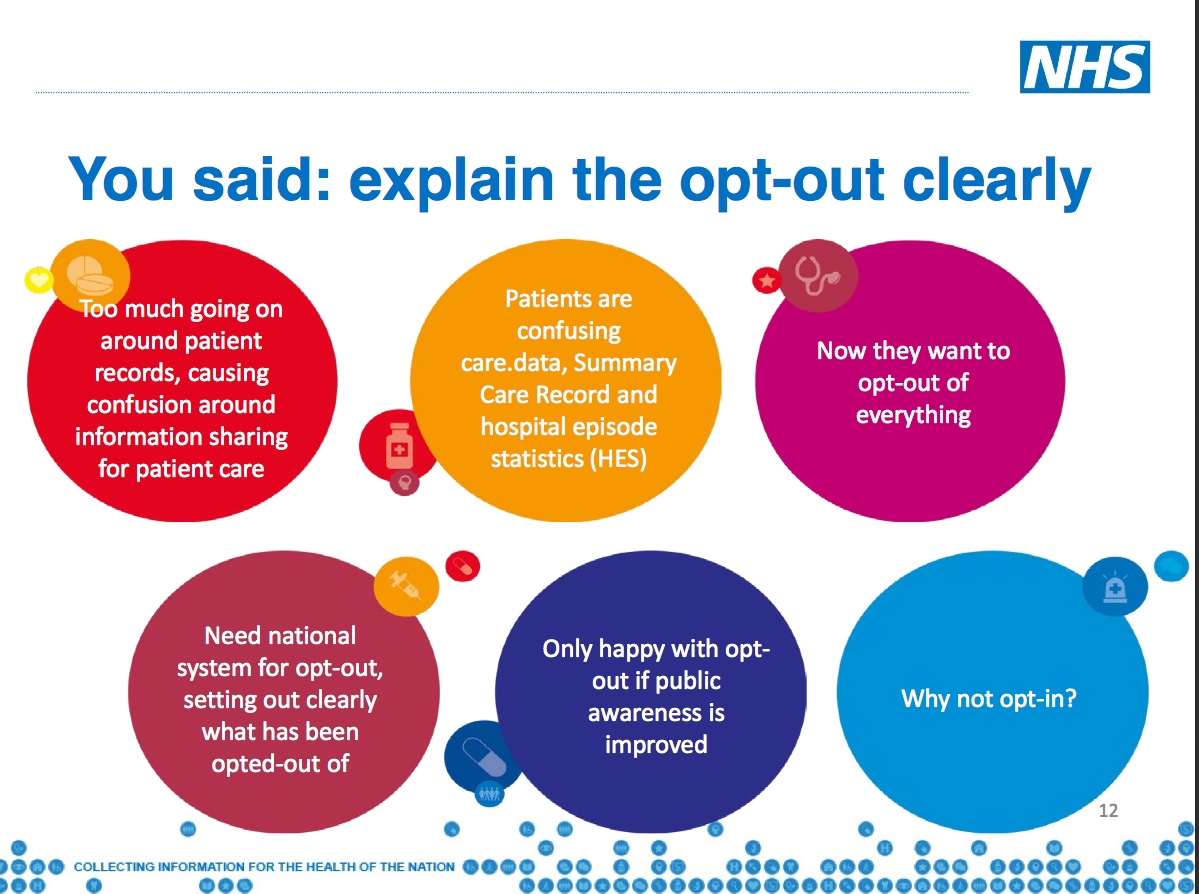

Here are some of NHS England’s own slides [4]. Points included a common demand from the public to give the opt out legal status:

Opt out needs legal status

Paul Bate talked about missing pieces of understanding on secondary uses, for [56:00] [3] “Commissioners, researchers, all the different regulators.” He gave an update, which assumed secondary use of data as the norm.

But he missed out any mention of the perceived cost of loss of confidentiality, and the loss of confidence since the failure to respect the 700,000 objections made in the aborted 2014 care.data rollout. That’s not even mentioning that so many did not even recall getting a leaflet, so those 700,000 came from the most informed.

When the public sees their opt out is not respected they lose trust in the whole system of data sharing. Whether for direct care, for use by an NHS organisation, or by any one of the many organisations vying to manage their digital health interaction and interventions. If someone has been told data will not be shared with third parties and it is, why would they trust any other governance will be honoured?

Looking back at the flawed pre-care.data leadership thinking that ‘no one who uses a public service should be allowed to opt out of sharing their records, nor can people rely on their record being anonymised’, and at the resulting disastrous attempt to roll out without communication, followed by a second attempt at fair processing, the lessons learned should inform future projects. That includes care.data mark 2. The original thinking is simply daft.

“You can object and your data will not be extracted and you can make no contribution to society,” Mr. Kelsey answered a critic on Twitter in 2014, and revealed that his thinking really hasn’t changed very much, even if he has been forced to make concessions. I should have said at #kfdigital15, ignoring what the public wants is not your call to make.

What legal changes will be made that back up the verbal guarantees given since February? If none are forthcoming, then were the statements made to Parliament untrue?

“people should be able to opt out from having their anonymised data used for the purposes of scientific research.” [Hunt, 2014]

We have yet to see this legal change. To date, the only publicly stated choice is for identifiable data, not for all data used for secondary purposes, including anonymous data, as offered by the Minister in February 2014 and by David Cameron in 2010.

If Mark Davies is being honest about how important he feels trust is to data sharing, implementing the objection should be a) prioritised and b) given legal footing.

Risks and benefits: need for a new social contract on Data

Simon Denegri recently wrote [5] he believes there are “probably five years to sort out a new social contract on data in the UK.”

I’d suggest less, if high profile data based projects or breaches irreparably damage public trust first, whether in the NHS or consumer world. The public will choose to share increasingly less.

But the public cannot afford to lose the social benefits that those projects may bring to the people who need them.

Big projects, such as care.data, cannot afford for everyone’s sake to continue to repeatedly set off and crash.

Smaller projects, those planned and in progress by each organisation and attendee at the King’s Fund event, cannot afford for those national mistakes to damage the trust the public may otherwise hold in the projects at local level.

I heard care.data mentioned five different times over the two-day event, in different projects, as having harmed the project through loss of trust or delays. We even heard examples of companies in Scotland going bust due to rollouts with slowed data access and austerity.

Individuals cannot afford for their reputation to be harmed through association, or by using data in ways the public finds unreasonable and getting splashed across the front page of the Telegraph.

Clarity is needed for everyone using data well whether for direct care with implied consent, or secondary uses without it, and it is in the public interest to safeguard access to that data.

A new social contract on data would be good all round.

Reputational Risk

The June 6th story of the 700,000 unrespected opt outs has been and gone. But the issue has not.

Can organisations continue to use that data ethically and legally knowing it is explicitly without consent?

“When will those objections be implemented?” should be a question that organisations across the country are asking – if reputational risk is a factor in any data sharing decision making – in addition to the fundamental ethical question: can we continue to use the data from an individual from whom we know consent was not freely given and was actively withheld?

What of projects that use HES or hospital secondary care sites’ submitted data and rely on the HSCIC POM mechanisms? How do those audits or other projects take HES secondary objections into account?

Sir Nick Partridge said in the April 2014 HSCIC HES/SUS audit there should be ‘no surprises’ in future.

That future is now. What has NHS England done since to improve?

“Consumer confidence appears to be fragile and there are concerns that future changes in how data may be collected and used (such as more passive collection via the Internet of Things) could test how far consumers are willing to continue to provide data.” [CMA Consumer report] [6]

The problem exists across both state and consumer data sharing. It is not a matter of if, but when, these surprises are revealed to the public with unpredictable degrees of surprise and revulsion, resulting in more objection to sharing for any purposes at all.

The solutions exist: meaningful transparency, excluding commercial purposes which appear exploitative, consensual choices, and no surprises. Shape communications processes by building-in future change to today’s programmes to future proof trust.

Future-proofing does not mean making the purpose and use of data so vague as to be all-encompassing. That is exactly what the public has said at care.data listening events they do not want and will not find sufficient to trust, nor, I would argue, would it meet legally adequate fair processing. It means building and budgeting for mechanisms in every plan today to inform patients of future changes to the use or users of data already gathered, and to offer them a new choice to object or consent. And they should have a way to know who used what.

The GP who asked the first of the only three questions that were possible in the 10-minute Q&A from the room had taken away the same as I had: the year 2020 is far too late as a public engagement goal. There must be much stronger emphasis on it now. And it is actually very simple. Do what the public has already asked for.

The overriding lesson must be, the person behind the data must come first. If they object to data being used, that must be respected.

It starts with fixing the opt outs. That must happen. And now.

Public confidence is not a game [7]. Reputational risk is not something organisations should be forced to gamble with to continue their use of data and potential benefits of data sharing.

If NHS England, the NIB or Department of Health know how and when it will be fixed they should say so. If they don’t, they better have a darn good reason why and tell us that too.

‘No surprises’, said Nick Partridge.

The question decision makers must address for data management is, do they continue to be part of the problem or offer part of the solution?

******

References:

[1] The Telegraph, June 6th 2015 http://www.telegraph.co.uk/news/health/news/11655777/Nearly-1million-patients-could-be-having-confidential-data-shared-against-their-wishes.html

[2] June 17th NIB meeting http://www.dh-national-information-board.public-i.tv/core/portal/webcast_interactive/180408

[3] NIB papers / workstream documentation https://www.gov.uk/government/publications/plans-to-improve-digital-services-for-the-health-and-care-sector

[4] care.data listening feedback http://www.england.nhs.uk/wp-content/uploads/2015/01/care-data-presentation.pdf

[5] Simon Denegri’s blog http://simondenegri.com/2015/06/18/is-public-involvement-in-uk-health-research-a-danger-to-itself/

[6] CMA findings on commercial use of consumer data https://www.gov.uk/government/news/cma-publishes-findings-on-the-commercial-use-of-consumer-data

[7] Data trust deficit: new research finds data trust deficit with lessons for policymakers http://www.statslife.org.uk/news/1672-new-rss-research-finds-data-trust-deficit-with-lessons-for-policymakers

[8] Caldicott review: information governance in the health and care system